Top‑1 Routing and Expert Pruning Slash MoE FFN Compute by 50%

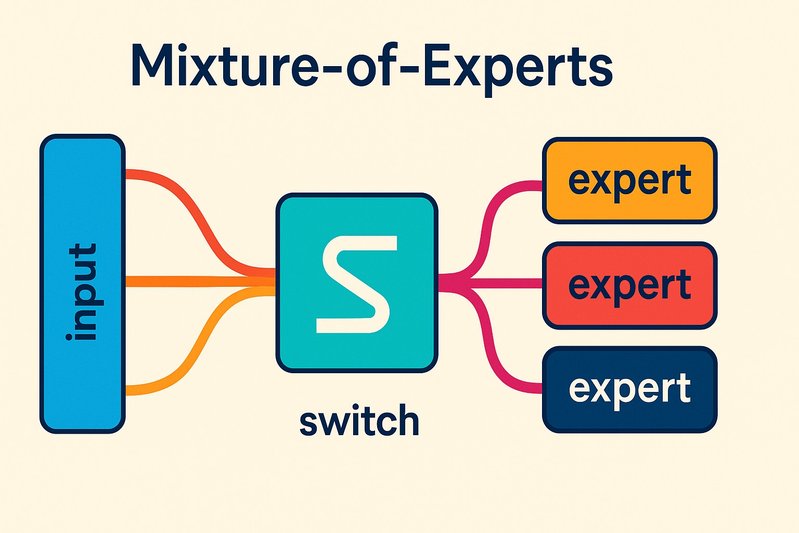

A single switch from top‑2 to top‑1 routing can nearly halve expert FFN FLOPs in mixture‑of‑experts (MoE) transformers—yet many teams fail to see the speedup in production because stragglers, padding, and imbalanced scheduling claw back the theoretical win. With the right routing math, capacity tuning, and MoE‑aware runtimes, the architectural sparsity turns into stable tokens/s.

Why now: state‑of‑the‑art open models like Mixtral and DeepSeek‑MoE have made MoE commonplace, and practical guides from DeepSpeed‑MoE and MegaBlocks show how to route and schedule experts without melting throughput. This article demonstrates how top‑k reduction and expert pruning cut compute, what breaks when you change routing, and how kernels and schedulers restore balance.

We’ll walk through: where MoE FLOPs go (spoiler: expert FFNs dominate), the exact 2× FFN reduction from top‑2 → top‑1 and the communication paths it removes, tuning capacity factors and auxiliary losses to prevent routing collapse, utilization‑driven expert pruning, runtime mechanics in DeepSpeed‑MoE and MegaBlocks for stable throughput, quality recovery via light LoRA, and a hands‑on checklist with code to implement and verify the changes.

Architecture/Implementation Details

Where the FLOPs actually go in MoE

In MoE Transformer blocks, attention stays dense while the MLP/FFN becomes sparse via experts. Per token, routing selects k experts; each selected expert applies its FFN. With k=2, you pay two FFN passes per token; with k=1, you pay one. Because FFNs dwarf attention FLOPs in many decoder settings, reducing k from 2 to 1 cuts expert FFN compute by roughly 50% and also reduces cross‑device traffic when experts are sharded.

From top‑2 to top‑1: gating math and paths removed

Let x be a token representation, W_r the router projection producing logits g = W_r x. The router selects the top‑k indices and applies a softmax over those k entries to produce mixture weights p. With k ∈ {1,2}:

# Pseudocode for MoE gating

logits = router(x) # [num_experts]

indices = topk(logits, k) # k = 1 (top‑1) or 2 (top‑2)

weights = softmax(logits[indices]) # mixture over selected experts

outputs = sum_j weights[j] * expert_ffn[indices[j]](x)Operationally, top‑2 introduces two expert dispatches per token, often via two all‑to‑all collectives: tokens are partitioned by expert, sent to devices hosting those experts, processed, and returned. Top‑1 removes one dispatch and halves the FFN invocations. It also reduces padding: within each expert’s micro‑batch, fewer tokens means fewer idle slots when batches are capacity‑bounded.

Communication paths simplified by top‑1:

- Fewer all‑to‑all phases and smaller payloads per step.

- Reduced expert‑inbound/outbound queues to drain.

- Less padding per expert micro‑batch when capacity is respected.

These effects compound with MoE‑aware schedulers that coalesce work and mitigate stragglers.

Capacity factor and auxiliary loss: preventing routing collapse

Switch‑style MoE uses a capacity factor C to limit tokens per expert: capacity = floor(C × tokens_per_batch / num_experts). Tokens beyond capacity are either dropped (train‑time) or rerouted, and a load‑balancing auxiliary loss encourages the router to distribute tokens evenly while preserving high‑probability assignments. After reducing k, two things commonly break:

- Collapse: the router over‑concentrates on a few experts, saturating capacity and causing overflow/padding.

- Underutilization: some experts go cold, hurting specialization and quality.

Mitigations:

- Increase the load‑balancing loss coefficient and warm‑start from a checkpoint that already uses that aux loss.

- Re‑tune C. With top‑1, slightly raising C (e.g., 1.0 → 1.2) provides headroom to avoid overflow at short horizons; at long contexts, you may lower C to reduce padding.

- Apply a short router‑only or LoRA‑on‑router recovery run to re‑spread traffic.

Expert pruning via utilization histograms

Once routing is stable under top‑1, profile expert utilization over representative workloads. Compute the fraction of tokens (or weighted tokens by gate score) handled per expert. Experts below a threshold (e.g., <0.5–1.0% over long windows) are candidates for pruning. After removal, re‑balance the router and run a light recovery to re‑center specialization.

Key safeguards:

- Use long traces and diverse prompts to avoid pruning experts that serve rare but essential niches.

- Prefer staged pruning with small batches of experts removed at a time, with validation between stages.

Throughput pitfalls: stragglers, padding, imbalance

Routing changes often surface hidden inefficiencies:

- Stragglers: one heavily loaded expert elongates the step while others idle.

- Padding: fixed‑shape kernels pad to capacity; uneven token‑to‑expert assignment magnifies wasted slots.

- Communication skew: imbalanced all‑to‑all traffic produces tail latency.

MegaBlocks tackles these by regrouping micro‑batches into balanced blocks, reducing padding and smoothing device‑level load; it remains effective under skewed distributions and post‑pruning expert sets. DeepSpeed‑MoE provides runtime knobs for capacity, overflow policies, and overlapping communication/compute that stabilize p50/p99 under top‑1.

MoE‑aware runtimes: DeepSpeed‑MoE and MegaBlocks

- DeepSpeed‑MoE: a production‑ready engine with top‑k gating, capacity factor controls, load‑balancing losses, and expert parallel sharding. It overlaps all‑to‑all with expert compute and exposes configuration for routing, capacity, and expert partitioning.

- MegaBlocks: a kernel and scheduling approach that partitions computation into uniform blocks, limiting padding and mitigating stragglers. It provides robust throughput when routing skew or expert pruning disrupts uniformity.

Both approaches are compatible with Mixtral‑style MoE and DeepSeek‑MoE training/inference pipelines and play well with router/aux‑loss formulations derived from Switch Transformers.

Implementation notes across GPU stacks without touching dense kernels

- CUDA/NVIDIA: If your attention/MLP dense paths already use fused kernels, you can adopt top‑1 and expert pruning without modifying dense kernels. Focus on MoE dispatch kernels and all‑to‑all overlap in DeepSpeed‑MoE, or swap in MegaBlocks for block‑grouped expert compute.

- Triton/ROCm: MegaBlocks compiles via Triton and runs on ROCm, giving AMD users a portable path to realize MoE routing gains without re‑writing dense kernels.

- Mixed environments: Keep dense kernels intact; route changes happen at the MoE dispatch/aggregation boundary. Validate collective performance and memory layout alignment, not attention/FFN math itself.

Maintaining quality after structural changes

Top‑1 and expert pruning alter specialization. Light recovery stabilizes metrics:

- LoRA on the router (and optionally expert feed‑forwards) for a few thousand steps often suffices to restore utilization balance and recapture 0.5–2 points on common evals, at a fraction of full fine‑tuning cost.

- Brief knowledge distillation from the pre‑pruning model can further preserve rare‑pattern experts’ knowledge after pruning.

Comparison Tables

Routing and pruning choices at a glance

| Approach | Compute effect | Communication effect | Primary risks | Best mitigations | Supporting runtime |

|---|---|---|---|---|---|

| Top‑2 (baseline) | 2× expert FFN per token | Two all‑to‑all dispatches | Higher FLOPs, padding | N/A (baseline) | Any MoE runtime |

| Top‑1 | ~50% fewer expert FFN FLOPs | One dispatch removed; smaller payloads | Routing collapse; cold experts | Tune aux loss, adjust C, short router recovery | DeepSpeed‑MoE, MegaBlocks |

| Expert pruning | Reduces parameter count and active experts | Fewer destinations; can heighten skew | Over‑pruning hurts specialization | Utilization histograms, staged pruning, LoRA recovery | DeepSpeed‑MoE, MegaBlocks |

Runtime mechanics: what stabilizes throughput

| Runtime | Key mechanism | Strengths under top‑1/pruning | Notes |

|---|---|---|---|

| DeepSpeed‑MoE | Overlapped all‑to‑all + expert compute; capacity/aux‑loss knobs | Production‑ready configs; easy router tuning | Integration across training/inference pipelines |

| MegaBlocks | Block‑grouped scheduling and compute | Mitigates padding/stragglers, robust to skew | Triton kernels, portable to ROCm |

Best Practices

- Start with top‑1, then prune: First stabilize routing at k=1, then prune cold experts based on long‑horizon utilization.

- Tune the router like a first‑class module:

- Increase load‑balancing loss weight after changing k; monitor entropy and per‑expert traffic.

- Re‑tune capacity factor C by batch size and sequence length; favor slightly higher C for short sequences to avoid overflow, lower C for long sequences to reduce padding.

- Use MoE‑aware runtimes:

- Enable DeepSpeed‑MoE’s overlapping all‑to‑all and capacity enforcement; or adopt MegaBlocks to reduce padding and stragglers.

- Profile before pruning:

- Record expert assignment histograms and per‑expert latency heatmaps under production‑like traffic; prune only persistently cold experts.

- Recover lightly:

- Apply LoRA to router/expert FFNs for a short recovery; optionally add small‑mix distillation to re‑center specialization.

- Verify long‑context behavior: Some experts capture rare long‑range patterns; include long‑context evals when pruning.

Practical Examples

1) DeepSpeed‑MoE configuration for top‑1 and capacity tuning

{

"moe": {

"enabled": true,

"num_experts": 16,

"top_k": 1,

"capacity_factor": 1.25,

"router_aux_loss_coef": 0.01,

"router_topk": true,

"moe_param_group": true,

"drop_tokens": false,

"alltoall": { "overlap_communication": true }

}

}- top_k: switch from 2 → 1 to halve expert FFN compute.

- capacity_factor: start slightly above 1.0 to prevent overflow at short contexts; re‑tune per workload.

- router_aux_loss_coef: raise modestly after top‑k change to prevent collapse.

- overlap_communication: keep GPUs busy during all‑to‑all.

2) Utilization histogram to drive expert pruning

# PyTorch‑like snippet capturing routing stats during eval

util = torch.zeros(num_experts, device="cuda")

with torch.no_grad():

for batch in loader:

logits = router(batch.hiddens) # [B, num_experts]

idx = torch.topk(logits, k=1, dim=-1).indices.squeeze(-1) # top‑1

util.index_add_(0, idx.flatten(), torch.ones_like(idx.flatten(), dtype=util.dtype))

util = util / util.sum() # fraction of tokens per expert

cold = (util < 0.005).nonzero().flatten() # e.g., <0.5%

print("Prune candidates:", cold.tolist())- Run over diverse, long traces. Confirm with per‑expert latency heatmaps.

- Prune in stages; after each stage, run a short LoRA recovery on router and affected experts.

3) Router‑only LoRA for quick recovery

from peft import LoraConfig, get_peft_model

lora_cfg = LoraConfig(

r=8, lora_alpha=16, target_modules=["router.proj"], lora_dropout=0.05

)

model.router = get_peft_model(model.router, lora_cfg)

# Fine‑tune for a few K steps on mixed tasks to re‑center specialization- Focus on router first; expand to expert FFNs selectively if specialization drifted.

Conclusion

Top‑1 routing is the cleanest lever to slash MoE FFN compute by about half, but the win only materializes end‑to‑end when you manage the router and scheduler as first‑class systems. Capacity factor and load‑balancing losses keep utilization even; utilization histograms identify experts that can be safely pruned; and MoE‑aware runtimes like DeepSpeed‑MoE and MegaBlocks convert architectural sparsity into stable tokens/s by taming padding, stragglers, and communication skew. A final touch of LoRA helps recover specialization after structural changes.

Key takeaways:

- Top‑2 → top‑1 removes one expert pass and an all‑to‑all leg per token, halving expert FFN FLOPs and cutting cross‑device traffic.

- Capacity and aux‑loss tuning prevent routing collapse; monitor utilization and entropy as first‑class metrics.

- Prune only persistently cold experts, guided by long‑horizon histograms; recover lightly with LoRA.

- DeepSpeed‑MoE and MegaBlocks stabilize throughput by overlapping communication and reducing padding/stragglers, even under skew and pruning.

Next steps:

- Flip to top‑1 in a staging environment, tune capacity and aux‑loss, and validate utilization and p99.

- Collect histograms over real traffic; stage expert pruning with checks between rounds.

- Adopt MoE‑aware runtime features (overlap all‑to‑all or block scheduling) and add a brief LoRA recovery.

The upshot: with disciplined routing and scheduling, MoE’s theoretical sparsity becomes real‑world throughput—without rewriting your dense kernels ⚙️.

Sources

- Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity — https://arxiv.org/abs/2101.03961 — Establishes top‑k gating, capacity factors, and auxiliary load‑balancing losses that underlie top‑1 routing stability.

- Mixtral of Experts — https://arxiv.org/abs/2401.04088 — Demonstrates practical MoE architectures and discusses routing behaviors and specialization considerations relevant to top‑k changes and pruning.

- DeepSeek‑MoE (repository) — https://github.com/deepseek-ai/DeepSeek-MoE — Shows real‑world gating controls and expert management used when applying routing adjustments and pruning.

- MegaBlocks: Efficient Sparse Mixture‑of‑Experts Training and Inference — https://arxiv.org/abs/2211.15841 — Provides block‑grouped scheduling/kernels that mitigate padding, stragglers, and imbalance under skewed/pruned expert loads.

- DeepSpeed‑MoE Tutorial — https://www.deepspeed.ai/tutorials/moe/ — Documents runtime mechanics, capacity/aux‑loss tuning, and overlapping communication/compute that stabilize throughput under top‑1.

- AMD ROCm Documentation — https://rocm.docs.amd.com/ — Confirms Triton/ROCm pathways for deploying MegaBlocks‑style kernels and MoE on AMD without rewriting dense kernels.

- LoRA: Low‑Rank Adaptation of Large Language Models — https://arxiv.org/abs/2106.09685 — Supports light adapter fine‑tuning to recover quality after routing/pruning.

- AdaLoRA: Adaptive Budget Allocation for Parameter‑Efficient Fine‑Tuning — https://arxiv.org/abs/2303.10512 — Provides an adapter‑efficient alternative for recovery and re‑centering specialization post‑pruning.