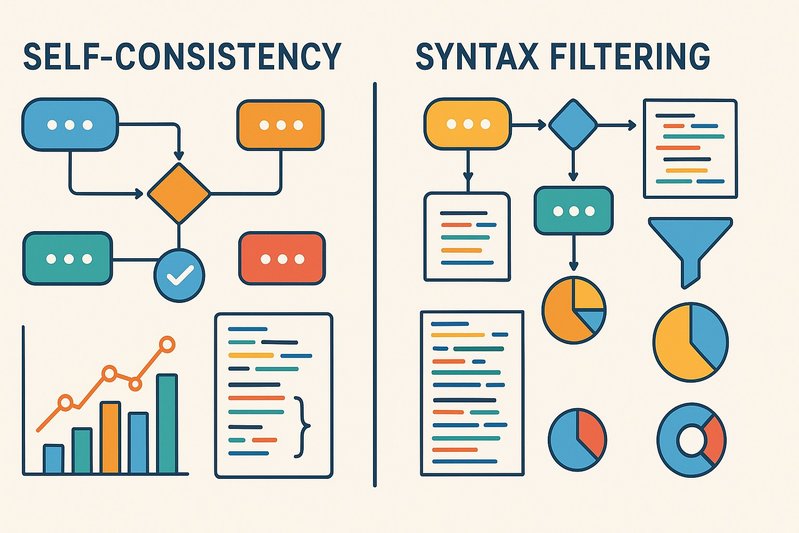

Self-Consistency and Syntax Filtering: The Backbone of Efficient Code Generation

Introduction

For large language models that write code, producing output that is both syntactically valid and semantically correct remains a central challenge. Recent research suggests that combining self-consistency with syntax filtering at inference time can substantially improve code generation accuracy. Why is this important now? As systems such as AlphaCode take on more automated coding tasks, tuning inference to improve the reliability and efficiency of their output matters more than ever. This article examines how these two techniques work and why they underpin current code generation pipelines. We’ll explore how self-consistency and syntax checks reduce errors, analyze performance benchmarks, and outline best practices for deploying these techniques effectively.

Architecture/Implementation Details

In code generation, self-consistency refers to sampling multiple candidate outputs from a language model and selecting the most reliable one according to specific criteria, such as passing syntactic checks, compiling successfully, or agreeing with the other candidates. Syntax filtering is a preliminary screen that parses and compiles each candidate and discards any that fail. This two-step process shrinks the pool that later, more expensive evaluation has to handle, so computational resources are used more efficiently.
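One way to make the selection step concrete is to treat agreement between candidates as the reliability signal. The sketch below groups candidates by the outputs they produce on a few probe inputs and returns one from the largest group. The function names (`behavior_signature`, `select_by_agreement`) and the probe inputs are hypothetical and chosen for illustration, and the in-process `exec` is used only for brevity; untrusted model output should be run in an isolated environment.

```python
from collections import defaultdict

def behavior_signature(source, func_name, probe_inputs):
    """Run one candidate on the probe inputs and return its outputs as a tuple.

    Returns None if the candidate fails to compile, lacks the function, or raises.
    """
    namespace = {}
    try:
        exec(compile(source, "<candidate>", "exec"), namespace)
        func = namespace[func_name]
        return tuple(repr(func(*args)) for args in probe_inputs)
    except Exception:
        return None

def select_by_agreement(candidates, func_name, probe_inputs):
    """Return a candidate from the largest group of behaviorally identical candidates."""
    groups = defaultdict(list)
    for source in candidates:
        signature = behavior_signature(source, func_name, probe_inputs)
        if signature is not None:
            groups[signature].append(source)
    if not groups:
        return None
    # On ties, max() keeps the group that was seen first.
    largest = max(groups.values(), key=len)
    return largest[0]

candidates = [
    "def add(x, y): return x + y",
    "def add(x, y): return y + x",
    "def add(x, y): return x - y",   # valid syntax, different behavior
    "def add(x, y) return x + y",    # syntax error, dropped
]
best = select_by_agreement(candidates, "add", [(1, 2), (0, 0), (-3, 5)])
```

Here the two addition variants agree on every probe input and outvote the subtraction variant, so one of them is returned. Agreement is only a proxy: a majority of candidates can share the same mistake, which is why it is usually paired with filtering and tests.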

AlphaCode exemplifies this approach by pairing large-scale sampling with filtering: it draws a large number of candidate solutions and discards those that fail basic checks. A filter of this kind can be sketched as follows:

```python
import ast

def filter_parse_compile(models_outputs):
    valid_outputs = []
    for output in models_outputs:
        try:
            tree = ast.parse(output)               # syntax check
            compile(tree, "<candidate>", "exec")   # byte-compile only; nothing is executed
        except (SyntaxError, ValueError):
            continue  # drop candidates that fail either step
        valid_outputs.append(output)
    return valid_outputs
```

By iterating over the model's outputs, the filter keeps only candidates that parse under the target language's grammar and that also compile, which catches errors a parser alone can miss. Every surviving candidate therefore meets a baseline of syntactic validity. Functional correctness still has to be established by running tests.

Benchmark Analysis

The effect of self-consistency and syntax filtering is usually measured with pass@k: the proportion of problems for which at least one of k sampled programs passes all test cases. Studies report that applying these techniques on benchmarks such as HumanEval and MBPP leads to significant improvements in pass@k, showcasing their effectiveness.
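To make the metric concrete, here is a minimal sketch of the unbiased pass@k estimator commonly used with HumanEval-style evaluation: draw n samples per problem, count the c that pass, and compute 1 - C(n-c, k) / C(n, k). The function names and the example counts are illustrative assumptions, not figures from any benchmark run.

```python
from math import comb

def pass_at_k(n, c, k):
    """Estimate pass@k for one problem from n samples, c of which pass all tests."""
    if k > n:
        raise ValueError("k must not exceed n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def mean_pass_at_k(results, k):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical counts for three problems, 20 samples each.
example_results = [(20, 0), (20, 3), (20, 20)]
score = mean_pass_at_k(example_results, k=5)
```

Computing the estimate from n > k samples, rather than drawing exactly k, reduces the variance of the reported number.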

In comparisons of various models, AlphaCode has demonstrated substantial improvements in these benchmarks:

| Configuration | Pass@5 | Pass@10 | Pass@20 |
|---|---|---|---|
| Baseline | 32% | 48% | 57% |
| With Self-Consistency | 45% | 60% | 72% |
| With Syntax Filtering | 39% | 52% | 63% |

These results show how much sampling scale matters: raising k from 5 to 20 lifts the baseline from 32% to 57%, and at k=20 self-consistency reaches 72% while syntax filtering reaches 63%. Higher pass rates mean fewer failed generations to debug, which can shorten development cycles.

Best Practices

Several practices help when implementing self-consistency and syntax filtering:

- Efficiency Optimization: Start with a triage phase where a lightweight syntax parser filters out non-viable candidates before proceeding to more computationally expensive tests.

- Two-Stage Sampling: Employ a broad sampling at an initial stage to generate diverse candidates, then use targeted re-sampling around the most promising examples.

- Sandbox Execution: Run generated code in an isolated environment so that buggy or malicious candidates cannot affect the host system.

- Automation and Scaling: Develop scripts and tools that can automate these processes, allowing for easy scaling and integration into larger pipelines.
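The triage and isolation practices above can be sketched together. The example below first applies a cheap syntax check, then runs each survivor with its tests in a separate Python interpreter under a timeout. All function names and the timeout value are assumptions made for illustration. A child process with a timeout limits the damage from crashes and infinite loops, but it is not a full sandbox; a real deployment would add containers or similar OS-level isolation.

```python
import ast
import subprocess
import sys
import tempfile
from pathlib import Path

def passes_syntax_check(source):
    """Cheap triage: True if the source parses as Python."""
    try:
        ast.parse(source)
        return True
    except (SyntaxError, ValueError):
        return False

def run_in_subprocess(source, test_code, timeout_s=10.0):
    """Run a candidate plus its tests in a separate interpreter; True on a clean exit."""
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "candidate.py"
        script.write_text(source + "\n\n" + test_code + "\n", encoding="utf-8")
        try:
            result = subprocess.run(
                [sys.executable, "-I", str(script)],  # -I: isolated mode
                cwd=workdir,
                capture_output=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return False
        return result.returncode == 0

def triage_then_test(candidates, test_code):
    """Syntax-filter first, then pay for execution only on the survivors."""
    survivors = [c for c in candidates if passes_syntax_check(c)]
    return [c for c in survivors if run_in_subprocess(c, test_code)]
```

Ordering the stages this way means the expensive step (spawning a process per candidate) is only paid for candidates that already parse.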

Practical Examples

Consider a scenario where a simple Python function is generated. During the initial sampling, the model might produce 100 versions of a function that adds two numbers. Here is how self-consistency and syntax filtering can be applied:

- Initial Collection: Sample 100 versions.

- Syntax Filtering: Use a parser like tree-sitter to analyze syntax:

```python
models_outputs = ["def add(x, y): return x+y"]  # ... plus 99 other samples
valid_outputs = filter_parse_compile(models_outputs)  # filter out invalid code
```

- Execution-based Verification: Run automated tests to verify functionality. A version that compiles, executes, and passes every test is selected.

This process raises confidence that the delivered output is correct while reducing the number of iterations needed to get there.
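The execution-based verification step for the `add` example might look like the following sketch. The helper names and the test cases are hypothetical, and the candidates are executed in-process with `exec` only to keep the example short; generated code from a real model should be run in an isolated environment.

```python
def passes_tests(source, func_name, test_cases):
    """True if the candidate defines func_name and matches every expected value."""
    namespace = {}
    try:
        exec(compile(source, "<candidate>", "exec"), namespace)
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in test_cases)
    except Exception:
        return False

def select_first_passing(candidates, func_name, test_cases):
    """Return the first candidate that passes every test, or None if none does."""
    for source in candidates:
        if passes_tests(source, func_name, test_cases):
            return source
    return None

# Each test case is (arguments, expected result).
add_tests = [((1, 2), 3), ((0, 0), 0), ((-4, 4), 0), ((2.5, 0.5), 3.0)]
samples = [
    "def add(x, y): return x * y",   # valid syntax, wrong behavior
    "def add(x, y): return x + y",
]
chosen = select_first_passing(samples, "add", add_tests)
```

The multiplication variant survives syntax filtering but fails the first test case, which is the gap that execution-based checks close.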

Conclusion

Self-consistency and syntax filtering make code generation models more efficient and more reliable. Pipelines that integrate these approaches reduce the probability of errors and spend compute where it matters, which makes them practical tools in software development. The key takeaways from this exploration include:

- Dramatic improvements in pass@k metrics offer a direct pathway to more robust and accurate code generation.

- Syntax filtering at inference time removes candidates with parse and compile errors before selection, so only well-formed code reaches the more expensive checks.

- Implementing these methods can significantly accelerate development cycles while maintaining high standards of coding integrity.

Looking ahead, these techniques pave the way for deeper integration of AI into development environments and for further advances in automation.