Revolutionizing Business with Dynamic Model Compression

Subtitle: How agent-driven adaptive pruning transforms enterprise AI adoption and return on investment

Introduction

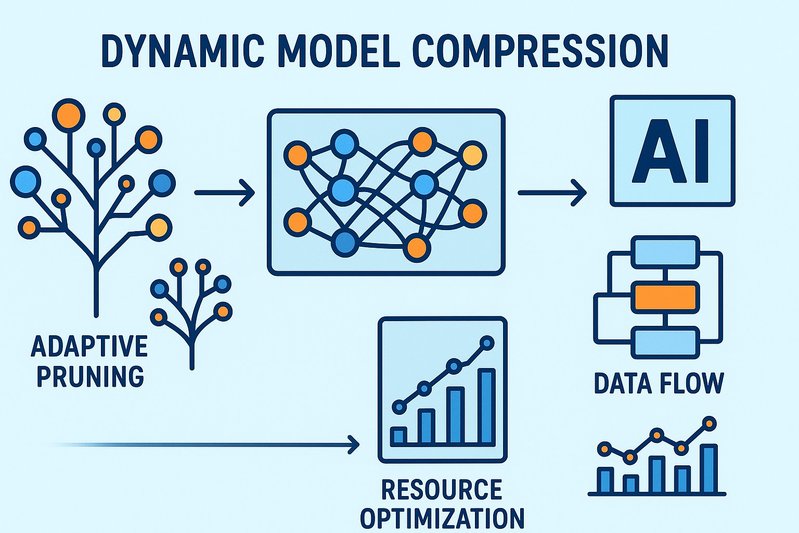

In an age where artificial intelligence drives competitive advantage, businesses are turning to new techniques that enhance their AI capabilities while keeping costs in check. Agent-driven adaptive pruning has recently emerged as a promising approach to model compression, one that could change how enterprises deploy AI. A report evaluating the technique suggests it can significantly improve resource allocation and financial returns. In this article, we explore how adaptive pruning is poised to affect business adoption of AI, covering current market trends, real-world case studies, and potential challenges.

Readers will discover how adaptive pruning could redefine AI strategy by reducing costs and increasing return on investment (ROI), and will come away with an understanding of the practical applications and considerations involved.

Understanding Market Need for Model Compression

As AI models become increasingly complex, the demand for efficient model compression solutions has never been greater. Large-scale language models place heavy demands on resources, which drives up computational cost, latency, and energy consumption. According to a recent industry report, these operating costs can discourage businesses from fully exploiting AI’s potential. AI tools capable of dynamic computation and resource pruning therefore address a critical need: they allocate resources according to real-time input difficulty, improving efficiency while controlling costs.

Benefits of Adaptive Pruning

Dynamic pruning adjusts an AI model’s complexity to the requirements of each input, using agent-driven policies that adapt in real time. This approach allows for greater flexibility and efficiency in handling heterogeneous data and variable processing demands. Unlike static models, which use fixed resources regardless of input complexity, adaptive pruning concentrates computation where it is most needed. The result is lower latency and energy consumption, an outcome discussed in connection with inference runtimes such as TensorRT-LLM and vLLM.
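To make the idea concrete, here is a minimal sketch of an input-dependent pruning decision. It is an illustration only: the difficulty proxy, the function names, and the defaults (32 layers, a 50% floor) are all assumptions, and a fixed heuristic stands in for the learned, agent-driven policy the article describes.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class PruningDecision:
    keep_ratio: float    # fraction of the model left active for this input
    active_layers: int   # number of layers that would actually run


def estimate_difficulty(tokens: Sequence[str]) -> float:
    """Hypothetical difficulty proxy in [0, 1]: longer, more varied inputs score higher."""
    if not tokens:
        return 0.0
    length_score = min(len(tokens) / 512, 1.0)       # 512 is an assumed cap
    diversity_score = len(set(tokens)) / len(tokens)  # share of distinct tokens
    return 0.5 * length_score + 0.5 * diversity_score


def choose_pruning(difficulty: float, total_layers: int = 32,
                   min_keep: float = 0.5) -> PruningDecision:
    """Map difficulty to a keep ratio: easy inputs run fewer layers, hard ones run all."""
    difficulty = max(0.0, min(difficulty, 1.0))       # clamp to [0, 1]
    keep_ratio = min_keep + (1.0 - min_keep) * difficulty
    active_layers = max(1, round(keep_ratio * total_layers))
    return PruningDecision(keep_ratio, active_layers)


if __name__ == "__main__":
    easy = "pay invoice 123".split()
    print(choose_pruning(estimate_difficulty(easy)))
```

A static model would always run all 32 layers. Here the easiest inputs run as few as 16, which is where the latency and energy savings would come from if the policy's difficulty estimate is reliable.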

Adoption Trends of Adaptive Pruning in Enterprises

The business landscape is gradually shifting toward adaptive pruning as a way to optimize AI deployments. As reported by industry whitepapers, enterprises in sectors like technology, finance, and healthcare are pioneering the adoption of these techniques, drawn by their promise of maintaining high performance while minimizing hardware costs. A survey by NVIDIA indicates that structured sparsity techniques, which constrain pruning to predefined patterns, lead to substantial improvements in processing speed and operational cost, particularly when implemented on hardware accelerators.

Key Drivers of Adoption

- Cost Efficiency: Lower power and hardware usage directly influence bottom-line savings.

- Performance Enhancement: Significant improvements in model throughput and latency performance.

- Scalability: Adaptive models better handle varied data types and workloads, enabling seamless AI application scaling across different business units worldwide.

Case Studies: Business Benefits and ROI

As businesses deploy adaptive pruning in successive iterations, results are beginning to emerge that showcase its tangible ROI. For instance, a leading financial institution implemented dynamic model pruning and experienced a 30% reduction in computational costs over the fiscal year. This streamlined approach enabled more efficient handling of complex financial modeling tasks with improved processing speeds.

In another case, a healthcare provider used dynamic pruning to refine its predictive analytics tools, cutting processing time and energy usage by nearly 20% without sacrificing output quality. This enhancement not only fostered patient-care improvements but also supported the institution’s environmental initiatives by reducing its data center’s overall carbon footprint.

Challenges and Considerations for Corporate Adoption

Despite its promising capabilities, the transition to adaptive pruning in business contexts does not come without challenges. Key considerations include:

- Implementation Complexity: Designing and integrating pruning policies demands significant upfront effort and expertise.

- Hardware Compatibility: Practical performance gains require specific hardware accelerators, which may not align with existing infrastructure.

- Learning Curve and Employee Training: Staff must be adequately trained to utilize new tools effectively, which could delay ROI realization.

Organizations must weigh these factors carefully against the potential benefits. Overcoming these challenges often involves strategic planning and investment in aligning current infrastructure with adaptive model processing capabilities.
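One simple way to frame that trade-off is a payback-period estimate. The sketch below is a back-of-the-envelope illustration: the function name and the dollar figures are hypothetical, and only the 30% savings rate echoes the financial-institution case described earlier.

```python
import math


def payback_months(monthly_compute_cost: float, savings_rate: float,
                   upfront_cost: float) -> int:
    """Months until cumulative compute savings cover the upfront adoption cost.

    savings_rate is the fraction of compute spend saved (0.30 means 30%).
    upfront_cost bundles implementation, hardware, and training spend.
    """
    if not 0.0 < savings_rate <= 1.0:
        raise ValueError("savings_rate must be in (0, 1]")
    if monthly_compute_cost <= 0 or upfront_cost < 0:
        raise ValueError("costs must be positive")
    monthly_savings = monthly_compute_cost * savings_rate
    return math.ceil(upfront_cost / monthly_savings)


if __name__ == "__main__":
    # Hypothetical inputs: $100k monthly compute, 30% savings, $250k upfront.
    print(payback_months(100_000, 0.30, 250_000))
```

With these assumed inputs the investment pays back within the first year. A lower savings rate or a larger hardware refresh would lengthen that period, which is why the considerations listed above matter.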

Practical Examples

Real-world application of dynamic pruning can involve configuring NVIDIA’s TensorRT-LLM to enforce 2:4 sparse pattern constraints, an approach that has been shown to accelerate AI model processing significantly by harnessing NVIDIA’s sparse tensor cores [14, 15]. In practical terms, executives in charge of AI deployment can have their teams use these configuration settings to tailor models for optimal performance and realize efficiency improvements quickly.
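For readers unfamiliar with the term, a 2:4 pattern means that in every group of four consecutive weights, at most two are nonzero. The sketch below illustrates the pattern in plain Python by keeping the two largest-magnitude weights per group. It is a conceptual illustration with a hypothetical function name, not TensorRT-LLM configuration or NVIDIA's pruning tooling.

```python
from typing import List, Sequence


def prune_2_of_4(row: Sequence[float]) -> List[float]:
    """Zero the two smallest-magnitude weights in each group of four."""
    if len(row) % 4 != 0:
        raise ValueError("row length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(row), 4):
        group = row[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


if __name__ == "__main__":
    weights = [0.1, -0.9, 0.5, 0.05, 1.0, 2.0, -3.0, 0.0]
    print(prune_2_of_4(weights))
    # prints [0.0, -0.9, 0.5, 0.0, 0.0, 2.0, -3.0, 0.0]
```

Because exactly half of each group is zero in a predictable layout, hardware with sparse tensor core support can skip the zeroed weights, which is where the speedup comes from. In practice, models pruned this way typically need fine-tuning to recover accuracy.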

For instance, an enterprise might tune its adaptive pruning to reduce host execution times, potentially cutting its invoice processing times by a significant margin and increasing throughput without expanding its server hardware.

Conclusion

Agent-driven adaptive pruning represents a significant shift in the strategic deployment of AI across industries. By dynamically allocating computational power where it’s most needed, businesses can achieve unprecedented improvements in efficiency and ROI.

Key Takeaways:

- Adaptive pruning significantly reduces operational costs, enhancing the bottom line.

- It improves AI model scalability by adjusting to the complexity of input data.

- Challenges exist but are outweighed by the potential for impressive ROI and operational efficiency improvements.

- Adoption of such technologies represents future-proofing for businesses heavily reliant on AI.

As businesses increasingly recognize the value of AI in their strategic operations, embracing tools like dynamic model compression will be crucial in staying competitive. Looking ahead, the intersection of AI efficiency improvements and business strategy will continue to yield exciting developments that reshape operational landscapes.