Hybrid Edge–Cloud Slashes Biometric Bandwidth Costs by 100× While Scaling to Million-ID Galleries

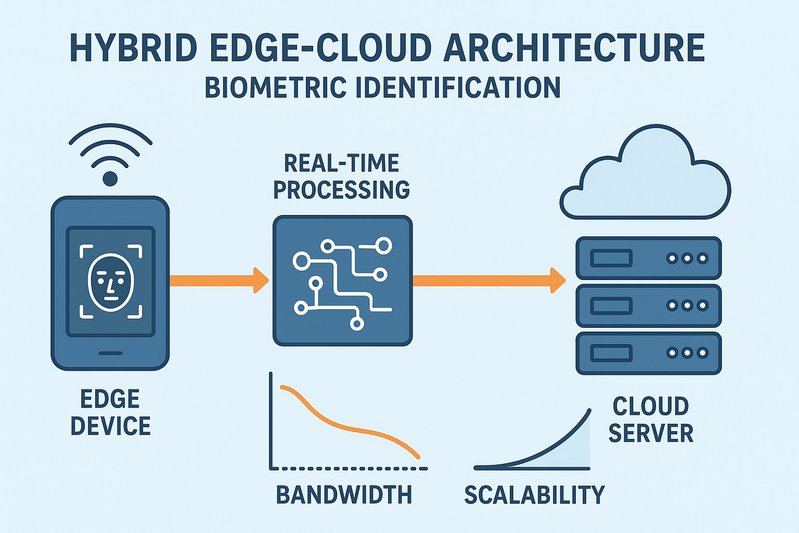

Megabits of video in, kilobytes of data out—that’s the new math for real-time biometric identification at scale. As organizations push identification from pilots to production across multi-site camera fleets, the largest line items aren’t chips or licenses; they’re bandwidth and cloud egress. Architectures that keep detection and embedding on the edge, then ship compact vectors to the cloud for large-scale search, are shrinking data transfer by orders of magnitude while preserving millisecond-level responsiveness. At the same time, million-ID galleries and cross-site analytics are pushing buyers toward hybrid patterns that preserve elasticity without committing to punitive ongoing data movement.

This article presents a CFO-ready view of the total cost of ownership (TCO), the bandwidth economics behind 100× reductions, and the break-even points that separate edge-first, hybrid, and cloud-only deployments. Readers will learn where costs accrue by architecture, how continuous streaming compares to embedding-only uplinks, where hybrid pays off for million-scale watchlists, and how governance frameworks (GDPR/CCPA/BIPA) shape the deployment decision. It closes with use-case segmentation, rollout playbooks, sensitivity analysis, and an executive checklist to select the right architecture by gallery size, concurrency, and policy.

ROI and Cost Drivers by Architecture

CapEx vs elastic OpEx

- Edge on-device and near-edge gateways front-load spend into device CapEx, installation, and on-prem storage. The payoff appears as low ongoing OpEx because bandwidth and cloud compute are minimized.

- Cloud-only shifts spend to elastic OpEx: GPU/CPU instance hours, storage, and data egress. It scales easily for pilots and bursty workloads but becomes a persistent expense under 24/7 ingest from multi-camera sites.

- Hybrid splits the difference: the edge performs detection/embedding (and often liveness), while the cloud runs approximate nearest neighbor (ANN) search across sharded indexes. OpEx follows actual query volume rather than video frames, and bandwidth bills shrink by two to three orders of magnitude compared with streaming.

flowchart TD

A[Edge Architecture] -->|Front-load spend| B[CapEx]

B -->|Leads to low ongoing| C[OpEx]

D[Cloud-only Architecture] -->|Elastic spending shifts to| E[OpEx]

E -->|Can become persistent under| F[24/7 ingestion]

G[Hybrid Architecture] -->|Detection performed on Edge| H[Cloud performs ANN]

H -->|OpEx tied to actual query volume| I[Reduced bandwidth costs]

Flowchart illustrating the ROI and cost drivers associated with different architectures: Edge, Cloud-only, and Hybrid.

Storage and egress line items

- Continuous 1080p30 video typically consumes 2–8 Mbps per stream depending on encoding. With public cloud data transfer out commonly priced per gigabyte, continuous video-based ingest can turn egress into a dominant, recurring line item.

- Embedding-only uplink transmits “hundreds to a few thousand bytes per query” instead of megabits per second. Even at high decision rates, this reduces data movement by two to three orders of magnitude, turning egress from a top-three line item into a comparatively small expense.

- Local storage remains a modest cost driver for edge and near-edge, dominated by gallery index size and retention policies. On higher-end gateways, practical in-memory galleries reach into the hundreds of thousands of vectors; million-scale galleries push search to the cloud.
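The gap between the two uplink styles is easy to check with arithmetic. The sketch below converts a continuous bitrate into monthly volume and compares it with an embedding-only uplink. The 2,048-byte payload and the queries-per-day figures are illustrative assumptions chosen to sit inside the "hundreds to a few thousand bytes per query" range, and decimal gigabytes are assumed (some providers meter in GiB).

```python
SECONDS_PER_DAY = 86_400
BYTES_PER_GB = 1_000_000_000  # assumption: decimal GB; some providers meter in GiB


def video_gb_per_month(mbps: float, days: int = 30) -> float:
    """Volume of one continuous stream over `days`, in GB."""
    bytes_per_second = mbps * 1_000_000 / 8
    return bytes_per_second * SECONDS_PER_DAY * days / BYTES_PER_GB


def embedding_gb_per_month(bytes_per_query: int, queries_per_day: int,
                           days: int = 30) -> float:
    """Volume of an embedding-only uplink over `days`, in GB."""
    return bytes_per_query * queries_per_day * days / BYTES_PER_GB


def reduction_factor(mbps: float, bytes_per_query: int,
                     queries_per_day: int) -> float:
    """How many times smaller the embedding uplink is than the video stream."""
    return video_gb_per_month(mbps) / embedding_gb_per_month(
        bytes_per_query, queries_per_day)


if __name__ == "__main__":
    for mbps in (2, 8):
        print(f"{mbps} Mbps stream: {video_gb_per_month(mbps):,.0f} GB/month")
    # Hypothetical workload: 2,048-byte payloads at two decision rates.
    for qpd in (10_000, 100_000):
        print(f"{qpd:,} queries/day: "
              f"{embedding_gb_per_month(2048, qpd):.2f} GB/month, "
              f"{reduction_factor(2, 2048, qpd):,.0f}x smaller than 2 Mbps video")
```

Under these assumptions a single 2 Mbps stream moves 648 GB in a 30-day month and an 8 Mbps stream moves 2,592 GB, while 10,000 queries per day at 2,048 bytes each come to roughly 0.6 GB. That is about a 1,000× reduction; at 100,000 queries per day it is still about 100×.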

Operational efficiency and SLA implications

- Edge-centric designs minimize WAN dependencies, keeping SLAs tight by eliminating the longest pole—network transit. Organizations avoid performance cliffs during WAN congestion, and help desks see fewer escalations tied to connectivity.

- Cloud-only centralizes visibility and updates but is sensitive to jitter and backhaul variability; SLAs must absorb WAN round-trips and queuing under load.

- Hybrid meets SLAs with on-edge processing plus one WAN round-trip, provided regional points of presence and caching are in place.

Bandwidth Economics and Break-Even Models

Continuous video vs embedding-only uplink

- Streaming 1080p30 at 2–8 Mbps per stream drives substantial, continuous data transfer to the cloud. Where data transfer out is billed per gigabyte, these steady flows accumulate directly into egress charges.

- Embedding-only uplink is kilobytes per decision—“hundreds to a few thousand bytes per query.” This cuts payloads by two to three orders of magnitude versus continuous video, vastly reducing ongoing egress and making cost per decision more predictable. 📉

flowchart TD;

A[Continuous Video Streaming] -->|2-8 Mbps/stream| B[Substantial Data Transfer]

B -->|Leads to| C[High Egress Charges]

D[Embedding-Only Uplink] -->|Kilobytes/decision| E[Reduced Data Transfer]

E -->|Results in| F[Lower Egress Charges]

F -->|Leads to| G[Predictable Costs]

H[Always-On Multi-Camera] -->|Suggests| I[Edge or Hybrid Solutions]

I -->|Reduces Need for| J[Video Backhaul]

I -->|Maintains| K[Central Governance]

Flowchart illustrating the economics of bandwidth in continuous video streaming versus embedding-only uplink, and the impact of always-on multi-camera solutions compared to bursty demand. It shows the relationship between data transfer rates, costs, and solutions for video management.

Always-on multi-camera vs bursty demand and pilots

- Always-on sites with many streams tip the scales toward edge or hybrid. Edge-only eliminates video backhaul; hybrid preserves central governance and million-scale search while keeping uplink tiny.

- Bursty or seasonal demand favors cloud elasticity. When cameras sit idle and decision volumes spike unpredictably, cloud-only can be cost-effective—especially in early pilots—because compute ramps with traffic and upfront CapEx remains low. However, as utilization climbs toward steady-state, bandwidth and egress costs dominate and erode the advantage.

Simple break-even intuition

- When a camera runs 24/7, every Mbps hurts. Reducing per-camera transfer from megabits per second to kilobytes per decision is the fastest route to TCO sanity. In practice, edge or hybrid typically wins for steady, multi-stream sites; cloud-only retains an edge for short-lived trials, rapid iteration, or low-duty-cycle programs where continuous ingest is limited.

- Compute is not the sole determinant. WAN reliability, policy restrictions, and index size shape where the search happens. If the gallery is small and policies demand data minimization, edge-only is compelling. If the gallery is very large or deduplication across sites matters, hybrid adds efficiency without reintroducing video backhaul.
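The break-even intuition can be made concrete with a toy model. In the sketch below, cloud-only cost scales linearly with duty cycle (transfer plus compute while the camera is active), and edge cost is amortized CapEx plus a small fixed OpEx. Every price and parameter is a hypothetical placeholder, not a quoted rate; substitute figures from your own contracts.

```python
HOURS_PER_MONTH = 720  # 30-day month


def cloud_only_monthly(duty_cycle: float, mbps: float,
                       transfer_usd_per_gb: float,
                       compute_usd_per_hour: float) -> float:
    """Monthly cost of streaming one camera to the cloud.

    duty_cycle is the fraction of the month the camera is streaming (0..1).
    """
    active_hours = duty_cycle * HOURS_PER_MONTH
    gb = mbps * 1_000_000 / 8 * 3600 * active_hours / 1_000_000_000
    return gb * transfer_usd_per_gb + active_hours * compute_usd_per_hour


def edge_monthly(capex_usd: float, amortization_months: int,
                 fixed_opex_usd: float) -> float:
    """Monthly cost of one edge device: amortized CapEx plus fixed OpEx."""
    return capex_usd / amortization_months + fixed_opex_usd


def break_even_duty_cycle(mbps: float, transfer_usd_per_gb: float,
                          compute_usd_per_hour: float, capex_usd: float,
                          amortization_months: int,
                          fixed_opex_usd: float) -> float:
    """Duty cycle at which cloud-only and edge cost the same.

    Because cloud cost is linear in duty cycle, the break-even is the ratio
    of edge cost to full-time cloud cost. A result above 1.0 means
    cloud-only is cheaper even at 24/7 under these inputs.
    """
    full_time_cloud = cloud_only_monthly(1.0, mbps, transfer_usd_per_gb,
                                         compute_usd_per_hour)
    return edge_monthly(capex_usd, amortization_months,
                        fixed_opex_usd) / full_time_cloud


if __name__ == "__main__":
    # All inputs below are hypothetical.
    d = break_even_duty_cycle(mbps=4, transfer_usd_per_gb=0.05,
                              compute_usd_per_hour=0.10, capex_usd=900,
                              amortization_months=36, fixed_opex_usd=5)
    print(f"Break-even duty cycle: {d:.1%}")
```

With these placeholder inputs the crossover lands at roughly 22% utilization: below that, cloud-only is cheaper per camera; above it, the edge device pays for itself. The model ignores installation, storage, and staffing, so treat it as a starting point for a fuller TCO worksheet.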

Scaling Strategies and Governance

Million-ID galleries push toward hybrid or cloud

- Edge RAM constrains local index size. High-end gateways can hold roughly 100k to a few hundred thousand vectors in memory, depending on precision and metadata. Pushing past a million identities reliably requires sharded ANN indexes in the cloud.

- Hybrid keeps embedding generation at the edge while the cloud handles sharded search with FAISS or a similar library, with regional caches for “hot” identities. This maintains small payloads, avoids video transit, and sustains low-latency decisions with one WAN round-trip.

- Cloud-only also handles million to billion-scale galleries but reintroduces the steady-state cost of streaming crops or frames and increases exposure to WAN performance tails.
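The sharded search pattern comes down to two steps: each shard returns its own top-k candidates, and a coordinator merges them into a global top-k. The sketch below shows that merge in pure Python, using exact cosine similarity as a stand-in for a per-shard ANN index. It is not FAISS code; the function names and the tiny two-dimensional gallery are illustrative assumptions.

```python
import heapq
import math


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search_shard(shard, query, k):
    """Top-k (score, identity) pairs within one shard.

    Exact scan used here as a stand-in for a per-shard ANN index.
    """
    scored = ((cosine(vec, query), ident) for ident, vec in shard.items())
    return heapq.nlargest(k, scored)


def search_sharded(shards, query, k):
    """Query every shard, then merge the partial results into a global top-k."""
    partials = [hit for shard in shards for hit in search_shard(shard, query, k)]
    return heapq.nlargest(k, partials)


if __name__ == "__main__":
    # Hypothetical gallery split across two shards.
    shards = [
        {"id-001": [1.0, 0.0], "id-002": [0.0, 1.0]},
        {"id-003": [0.9, 0.1], "id-004": [-1.0, 0.0]},
    ]
    for score, ident in search_sharded(shards, [1.0, 0.0], k=2):
        print(ident, round(score, 4))
```

Because each shard only returns k candidates, the coordinator merges at most k times the shard count, regardless of gallery size. In a production deployment the shard queries would run in parallel, and the result that travels back to the edge would be a handful of identifiers and scores.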

Risk, compliance, and governance as business variables

- Data minimization is a policy lever, not just a tech feature. Keeping templates and decisions on-device or sending only embeddings reduces the volume of personal data transmitted or stored centrally.

- GDPR emphasizes necessity and proportionality; CCPA/CPRA and BIPA place strict constraints on biometric identifiers. Architectures that minimize data flow and retention simplify Data Protection Impact Assessments (DPIAs), reduce breach blast radius, and streamline subject rights operations.

- Presentation attack detection (PAD) should be enforced at the edge when possible to stop attacks before data leaves the site, informed by recognized testing standards. Governance should explicitly cover watchlist creation, retention, access controls, audit trails, and fairness monitoring.

Procurement and rollout strategies

- Phase deployments: start with on-device or near-edge at one or two sites to lock in SLA and policy baselines. Add a regional point of presence and cloud search for cross-site deduplication as galleries grow.

- Leverage regionality: position cloud indexes by region to keep round-trip times moderate; use edge caches for frequently matched identities to amortize WAN latency.

- Vendor evaluation: prioritize support for edge-to-cloud split (embedding uplink), sharded ANN at scale, index compression options, and data governance tooling. Confirm egress policy alignment and transparent bandwidth metering in contracts.
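One way to picture the edge cache for frequently matched identities is a small least-recently-used store that is checked before any cloud query. The sketch below is a minimal illustration under assumed names (`HotIdentityCache`, `match`, `put`) and an assumed similarity threshold. A real deployment would also need expiry, watchlist revocation, and encryption at rest.

```python
import math
from collections import OrderedDict


def cosine(a, b):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) *
                  math.sqrt(sum(y * y for y in b)))


class HotIdentityCache:
    """LRU cache of recently matched templates held at the edge (hypothetical)."""

    def __init__(self, capacity: int, threshold: float):
        self.capacity = capacity
        self.threshold = threshold
        self._templates = OrderedDict()  # identity -> template, oldest first

    def match(self, query):
        """Return the best cached identity scoring at or above the threshold.

        Returns None on a miss, in which case the caller falls back to the
        cloud search and can store the result with put().
        """
        best_id, best_score = None, self.threshold
        for identity, template in self._templates.items():
            score = cosine(query, template)
            if score >= best_score:
                best_id, best_score = identity, score
        if best_id is not None:
            self._templates.move_to_end(best_id)  # mark as most recently used
        return best_id

    def put(self, identity, template):
        """Insert or refresh a template, evicting the least recently used."""
        self._templates[identity] = template
        self._templates.move_to_end(identity)
        if len(self._templates) > self.capacity:
            self._templates.popitem(last=False)
```

A cache hit resolves the decision locally with no WAN round-trip. A miss costs one cloud query, after which `put` keeps that identity warm for subsequent matches.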

Use-Case Segmentation and Decision Checklist

Access control and on-prem security

- Priorities: strict latency, privacy, and modest galleries.

- Preferred architecture: edge on-device or near-edge. Decisions stay local, PAD runs on-device, and templates are stored encrypted at rest. Backhaul carries only alerts or summaries.

Retail POS and floor analytics

- Priorities: low WAN overhead, predictable cost, site-level fusion across multiple cameras.

- Preferred architecture: near-edge with the option to add hybrid search later. Keep bandwidth trivial during pilots, then expand into sharded search if watchlists or cross-store deduplication become business-critical.

Transportation nodes and bandwidth-constrained sites

- Priorities: intermittent connectivity, resilience, small uplink windows.

- Preferred architecture: edge on-device with store-and-forward alerts. Periodically synchronize embeddings or watchlists when connectivity allows.
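Store-and-forward can be sketched as a bounded queue that buffers alerts while the uplink is down and drains in order once it returns. The class below is a minimal in-memory illustration. The `send` callable, the class name, and the buffer limit are assumptions, and a production system would persist the buffer to disk so alerts survive a reboot.

```python
from collections import deque


class StoreAndForward:
    """Buffer alerts locally and forward them when the uplink is available."""

    def __init__(self, send, max_buffered: int = 10_000):
        # `send` is a caller-supplied callable that returns True when the
        # alert was delivered and False when the uplink is unavailable.
        self._send = send
        # With maxlen set, appending to a full deque drops the oldest entry.
        self._buffer = deque(maxlen=max_buffered)

    def submit(self, alert) -> int:
        """Queue an alert and try to drain; returns how many were sent."""
        self._buffer.append(alert)
        return self.flush()

    def flush(self) -> int:
        """Send buffered alerts oldest-first until one fails or none remain."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # uplink down: keep the alert for the next attempt
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        """Number of alerts still waiting for delivery."""
        return len(self._buffer)
```

Calling `flush()` on a timer or on a link-up event drains the backlog during short connectivity windows. An alert is removed from the buffer only after `send` reports success, so a failed attempt never loses data that is still within the buffer limit.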

City-scale analytics and enterprise SOCs

- Priorities: million-scale galleries, cross-site deduplication, centralized governance.

- Preferred architecture: hybrid with regional points of presence. Edge handles detection/embedding/PAD; the cloud handles sharded ANN, policy enforcement, and global analytics while ingest remains kilobytes per decision.

At-a-glance comparison of cost levers

| Architecture | Bandwidth Profile | Compute Profile | Gallery Scale | Governance Footprint |
|---|---|---|---|---|
| Edge on-device | Alerts/metadata only | Device NPUs/GPUs | Up to ~100k–few hundred k | Minimal data transit; strong data minimization |
| Near-edge gateway | Alerts/metadata only | Site node consolidates streams | Larger per-site | Centralized per-site control |
| Hybrid edge–cloud | Embeddings/thumbnails only (KB/query) | Edge embeds + sharded cloud ANN | Million-scale | Balanced: minimized uplink + central policy |
| Cloud-only | Crops/frames; 2–8 Mbps per 1080p stream if continuous | Elastic GPUs/CPUs | Million to billion | Centralized; higher privacy risk without minimization |

Financial Sensitivity Analysis

Utilization

- As utilization rises toward 24/7, cloud-only costs scale with continuous ingest; bandwidth and egress grow into dominant line items. Edge and hybrid amortize CapEx and keep OpEx stable, improving cost per inference over time.

- For low-duty cycles or intermittent use, cloud-only remains attractive because compute spend aligns with limited traffic and CapEx is minimal.

Watchlist growth

- Small galleries fit at the edge and support the lowest OpEx. Beyond a few hundred thousand identities, hybrid takes over to avoid edge memory pressure and cold-start index loads. Persisting in cloud-only for large galleries is viable but carries continuous ingest costs.
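Edge memory pressure can be estimated before hardware is purchased. The sketch below sizes an in-memory gallery from vector dimension, numeric precision, and per-identity metadata, then inverts the calculation to find how many identities fit in a given RAM budget. The 512-dimension embedding, 64 bytes of metadata, and 512 MiB budget are illustrative assumptions; ANN index overhead is ignored.

```python
def gallery_bytes(num_ids: int, dim: int = 512, bytes_per_value: int = 4,
                  metadata_bytes: int = 64) -> int:
    """Approximate RAM for a flat in-memory gallery (no index overhead)."""
    return num_ids * (dim * bytes_per_value + metadata_bytes)


def max_ids_in_budget(ram_budget_bytes: int, dim: int = 512,
                      bytes_per_value: int = 4,
                      metadata_bytes: int = 64) -> int:
    """Largest gallery that fits in the RAM set aside for templates."""
    return ram_budget_bytes // (dim * bytes_per_value + metadata_bytes)


if __name__ == "__main__":
    MIB = 1024 * 1024
    for n in (100_000, 1_000_000):
        print(f"{n:,} ids (float32): {gallery_bytes(n) / 1e9:.2f} GB")
    # Hypothetical gateway that reserves 512 MiB for templates.
    print("float32 capacity:", max_ids_in_budget(512 * MIB))
    print("int8 capacity:   ", max_ids_in_budget(512 * MIB, bytes_per_value=1))
```

Under these assumptions a million float32 templates need a little over 2 GB, while a 512 MiB budget holds about 254,000. Quantizing to one byte per dimension raises that ceiling, which illustrates why capacity depends on precision and metadata.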

Network variability

- Sites with congested Wi‑Fi or variable 5G uplinks benefit from edge-centric processing. Hybrid tolerates variability well because embeddings are small and resilient to jitter; streaming architectures suffer SLA erosion and cost unpredictability under the same conditions.

Decision Checklist for Executives

- Gallery size:

  - ≤100k: favor edge on-device or near-edge for lowest bandwidth and latency.

  - ≥1M: favor hybrid with sharded cloud ANN and edge caches.

- Concurrency:

  - Many always-on cameras: avoid streaming; choose edge or hybrid.

  - Bursty traffic/pilots: consider cloud-only for agility and low upfront cost.

- Policy and jurisdiction:

  - Strict privacy regimes or unionized environments: prioritize data minimization via edge-first or hybrid with embedding-only uplink.

  - High-assurance sites: enforce PAD on-device; ensure encryption at rest and robust audit trails.

- Network:

  - Unreliable or expensive WAN: edge-only decisioning with store-and-forward.

  - Governed networks with regional PoPs: hybrid for tight SLAs and million-scale search.

- Operations:

  - Limited on-site IT: near-edge consolidation to simplify maintenance.

  - Centralized SOC: hybrid with regional points of presence and clear egress controls.
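Part of the checklist can be encoded as a first-pass screening rule. The function below is one possible ordering of the gallery, concurrency, and network criteria, not a definitive policy: the precedence among rules and the handling of galleries between 100k and 1M are assumptions, and the policy and operations criteria are left to human review.

```python
def recommend(gallery_size: int, always_on: bool, wan_reliable: bool,
              bursty_pilot: bool = False) -> str:
    """Suggest a starting architecture from a few checklist inputs.

    Rule precedence is an assumption: network constraints first, then
    gallery scale, then workload shape.
    """
    if not wan_reliable:
        return "edge-only with store-and-forward"
    if gallery_size >= 1_000_000:
        return "hybrid"
    if bursty_pilot and not always_on:
        return "cloud-only"
    if gallery_size <= 100_000:
        return "edge or near-edge"
    # Between 100k and 1M the answer depends on gateway memory.
    return "near-edge or hybrid"


if __name__ == "__main__":
    print(recommend(50_000, always_on=True, wan_reliable=True))
    print(recommend(2_000_000, always_on=True, wan_reliable=True))
    print(recommend(50_000, always_on=False, wan_reliable=True,
                    bursty_pilot=True))
```

Treat the output as a conversation starter for an architecture review. Jurisdiction, PAD requirements, and on-site IT capacity can each override the suggestion.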

Conclusion

The economics of real-time biometric identification have shifted from flops to flows. Moving detection and embedding to the edge and sending only compact vectors to the cloud collapses bandwidth by 100× or more, turning unpredictable network bills into manageable pennies while preserving fast, reliable SLAs. For steady, multi-camera sites, that’s the difference between a workable TCO and runaway OpEx. For million-ID galleries and cross-site analytics, hybrid architectures preserve those gains while delivering elastic, sharded search and consistent governance. Cloud-only still shines for short-lived pilots and low-duty-cycle programs, but its steady-state egress and ingest costs compound quickly in production.

Key takeaways:

- Bandwidth is the lever: embedding-only uplink shrinks data transfer by orders of magnitude versus streaming video.

- Edge or hybrid wins for always-on, multi-camera sites; cloud-only fits bursty, short-lived workloads.

- Million-scale galleries point to hybrid—with regional PoPs and edge caches—for balanced performance and cost.

- Data minimization is both a compliance strategy and a cost strategy.

- Procurement should prioritize edge-cloud splits, sharded search, and clear egress terms.

Next steps:

- Quantify per-site bandwidth using 1080p30 at 2–8 Mbps per stream as a baseline, then model hybrid embedding volumes.

- Map gallery growth over 12–36 months to anticipate when hybrid becomes necessary.

- Align architecture with governance: DPIAs, retention, PAD posture, and subject rights operations.

- Pilot at one or two sites with edge-first, then layer in hybrid search and regional PoPs as watchlists and concurrency scale.

Organizations that make bandwidth a first-class metric—and architect for it—will realize faster deployments, cleaner governance, and materially lower TCO as biometrics move from pilots to production at scale.