C2PA Ingest to Perceptual Staydown: Engineering Moderation Pipelines for AI‑Generated Sexual Images at Scale

Global regulators have drawn a bright line around AI‑generated explicit images and non‑consensual intimate imagery (NCII): large fines, formal audits, and rapid takedown expectations now shape how platforms must build. With penalties reaching up to double‑digit percentages of global turnover and transparency duties that extend from provenance to labeling and appeals, the technology stack is no longer optional plumbing—it is the compliance surface.

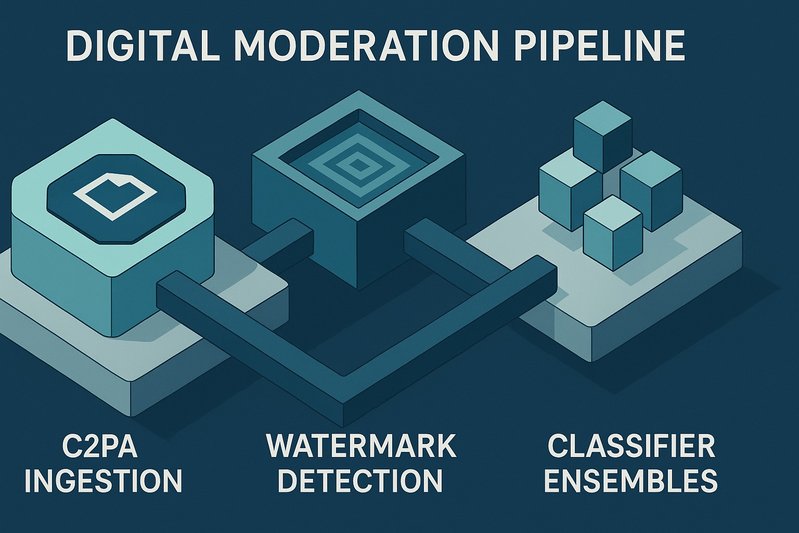

This blueprint details how to architect a production‑grade moderation pipeline for AI‑generated sexual content and NCII, from C2PA manifest ingestion and robust watermark detection to classifier ensembles, perceptual hashing for staydown, and age/consent verification under strict privacy constraints. The aim is to show how to fuse provenance, detection, hashing, and verification into a jurisdiction‑aware system that produces actionable decisions, with audit‑ready logs, minimal sensitive‑data exposure, and operational controls that stand up to regulators and red teams alike.

Readers will learn: a pragmatic end‑to‑end topology; how to propagate content credentials through transcoding; how to design classifier and watermark‑fusion confidence scores; what targeted staydown entails; and how to integrate age, identity, and consent verification—while meeting privacy‑by‑design expectations and cross‑region obligations.

Architecture/Implementation Details

End‑to‑end pipeline topology

A resilient moderation pipeline separates ingest, analysis, decisioning, and enforcement while ensuring privacy gates and auditability:

- Upload ingestion: An edge service accepts images/video, extracts any C2PA manifest, computes initial checksums, and routes to the processing graph. It must support user reports and trusted‑flagger inputs that reference existing content or URLs.

- Processing graph: A fan‑out DAG runs the following stages in parallel:

  - Provenance layer: C2PA manifest verification; credential and signature validation; chain‑of‑custody extraction.

  - Robust watermark detection: Multiple detectors for state‑of‑the‑art watermarking and GPAI detectability signals.

  - Classifier ensemble: NCII and sexual deepfake models plus context/risk features. Human‑review routing for edge cases is built in by design.

  - Perceptual hashing: Hash families generated for staydown, plus face‑match/identity workflows only when appropriate and consented.

- Decisioning services: Policy logic fuses multi‑signal outputs with jurisdictional flags to apply allow/label/restrict/remove decisions, and to trigger staydown registration.

- Moderation queues: Tiered queues for NCII emergency response, adult‑content review, and appeals. Reasoned outcomes and statements of reasons are logged for transparency and audit readiness.

- Enforcement and labeling: Client‑facing labels for AI‑generated/manipulated content, geofenced where required, paired with metadata plumbing that persists across derivatives and transcodes.

This separation supports lawful, proportionate proactive measures while avoiding prohibited “general monitoring” approaches. Where services face the strictest risk and audit scrutiny, the architecture must also expose artifact‑level logs and decision rationales for periodic independent audits.
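The fan-out stage described above can be sketched with `asyncio`. This is a minimal illustration, not a reference design: the four stage functions, the `Signals` container, and the two-second timeout are all hypothetical placeholders. The point it shows is that each branch runs concurrently and that a failing or slow branch is recorded rather than allowed to abort the whole analysis.

```python
import asyncio
from dataclasses import dataclass, field


@dataclass
class Signals:
    asset_id: str
    results: dict = field(default_factory=dict)  # stage name -> output
    errors: dict = field(default_factory=dict)   # stage name -> failure reason


# Hypothetical stand-ins for the four analysis branches.
async def provenance(asset: bytes) -> dict:
    return {"manifest_present": False}


async def watermark(asset: bytes) -> dict:
    return {"likelihood": 0.0}


async def classifiers(asset: bytes) -> dict:
    return {"sexual_content": 0.0, "manipulation": 0.0}


async def hashing(asset: bytes) -> dict:
    return {"hashes": []}


STAGES = {
    "provenance": provenance,
    "watermark": watermark,
    "classifiers": classifiers,
    "hashing": hashing,
}


async def analyze(asset_id: str, asset: bytes, timeout_s: float = 2.0) -> Signals:
    async def guarded(name, stage):
        # Isolate each branch so one failure or timeout cannot sink the others.
        try:
            return name, await asyncio.wait_for(stage(asset), timeout_s), None
        except Exception as exc:  # includes timeouts
            return name, None, repr(exc)

    outcomes = await asyncio.gather(*(guarded(n, s) for n, s in STAGES.items()))
    signals = Signals(asset_id)
    for name, result, error in outcomes:
        if error is None:
            signals.results[name] = result
        else:
            signals.errors[name] = error
    return signals
```

Recording errors per stage lets the decisioning layer treat a missing signal as "unknown" and route the asset to human review instead of silently allowing it.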

C2PA manifest ingestion and credential validation

Platforms increasingly encounter content with provenance credentials. The ingest service should:

- Parse C2PA manifests at upload; verify signatures, certificate chains, and tamper evidence.

- Extract model‑level disclosure (e.g., declared AI generation or manipulation) and bind these facts to the asset’s internal record.

- Persist a normalized provenance record, and propagate it through transcoding by embedding credentials into derivatives where technically feasible and policy‑permitted.

- Expose a consistent API/SDK so downstream services (rendering, sharing, export) can read and display provenance and labels.

C2PA is not universal, so the system must never treat its absence as proof of authenticity. It is one signal among many, but a powerful one for user‑facing disclosures and audit trails.
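One way to make that rule hard to violate is to normalize verifier output into a record with an explicit three-state status. The sketch below assumes a hypothetical upstream verifier that returns a `report` dict with the keys `signature_valid`, `chain_trusted`, `declared_ai`, and `signer`. None of these names come from the C2PA specification or any SDK; they are illustrative only.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ProvenanceStatus(Enum):
    VERIFIED = "verified"  # manifest present; signature and chain check out
    INVALID = "invalid"    # manifest present but failed validation
    ABSENT = "absent"      # no manifest; says nothing about authenticity


@dataclass(frozen=True)
class ProvenanceRecord:
    asset_id: str
    status: ProvenanceStatus
    declared_ai: Optional[bool]  # None means "no trustworthy declaration"
    signer: Optional[str]


def normalize(asset_id: str, report: Optional[dict]) -> ProvenanceRecord:
    """Map a (hypothetical) verifier report onto the internal record."""
    if report is None:
        return ProvenanceRecord(asset_id, ProvenanceStatus.ABSENT, None, None)
    if not (report.get("signature_valid") and report.get("chain_trusted")):
        # Do not trust any disclosure carried by a manifest that failed checks.
        return ProvenanceRecord(asset_id, ProvenanceStatus.INVALID, None, None)
    return ProvenanceRecord(
        asset_id,
        ProvenanceStatus.VERIFIED,
        report.get("declared_ai"),
        report.get("signer"),
    )
```

Keeping `ABSENT` distinct from `VERIFIED` with `declared_ai=False` means downstream code cannot accidentally read a missing manifest as a statement that the content is authentic.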

Robust watermark detection and signal fusion

Watermarks from model providers and GPAI detectability measures are now expected. Practical implementation requires:

- Multiple detectors: Run diverse detectors to mitigate vendor/model drift. Treat detection as probabilistic, not binary.

- Transform robustness: Evaluate detectors on common transformations (resize, crop, recompress) and record detection rates per transformation; this blueprint does not prescribe target values.

- Fusion with model fingerprints and classifiers: Combine watermark likelihood with ensemble classifier outputs and provenance disclosures to produce a calibrated confidence score.

- Adversarial resilience: Include checks for watermark‑stripping artifacts, and route low‑confidence or adversarial‑suspect cases to human review.

Confidence scoring should be monotonic and explainable. A transparent rubric—e.g., “provenance disclosure + strong watermark + classifier consensus”—helps justify decisions and reduces appeals churn.
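A simple way to get both properties is a logistic combination with non-negative weights: raising any input can never lower the score, and the per-signal contributions double as the explanation. The sketch below is illustrative only. The signal names, weights, and bias are hypothetical and uncalibrated; a production system would fit and calibrate them on labeled data.

```python
import math

# Hypothetical, uncalibrated weights. Non-negative weights keep the score
# monotonic: increasing any input signal never decreases the output.
WEIGHTS = {"provenance_declared_ai": 2.0, "watermark": 3.0, "classifier": 3.0}
BIAS = -4.0


def fuse(signals: dict) -> tuple:
    """Return (score in (0, 1), per-signal contributions to the logit)."""
    contributions = {}
    for name, weight in WEIGHTS.items():
        # Missing signals count as 0.0; inputs are clamped to [0, 1].
        value = min(max(float(signals.get(name, 0.0)), 0.0), 1.0)
        contributions[name] = weight * value
    logit = BIAS + sum(contributions.values())
    return 1.0 / (1.0 + math.exp(-logit)), contributions


score, why = fuse({"provenance_declared_ai": 1.0, "watermark": 0.9, "classifier": 0.8})
```

The `why` dictionary can be written straight into the statement of reasons, so a reviewer sees which signals drove the score.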

Classifier ensembles for NCII and sexual deepfake detection

Obligations to mitigate deepfake and NCII harms make proactive detection proportionate for high‑risk services. Engineering choices:

- Multi‑head ensembles: Separate heads for nudity/sexual context, face‑swap/manipulation cues, and NCII indicators. Avoid single‑model dependence.

- Precision/recall balancing: Tune per‑queue. NCII emergency queues may tolerate higher false positives to maximize victim protection, while general feeds require tighter precision. Concrete operating points depend on the service and are not specified here.

- Human‑in‑the‑loop: Mandate review for borderline scores, public‑figure cases, and conflicting signals. Maintain victim‑authenticated channels that can authorize face matching for takedown/staydown.

- Documentation and audits: Record training sources, thresholds, and calibration procedures to substantiate claims about efficacy.
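The per-queue tuning and mandatory-review rules above can be sketched as a small routing function. This is an assumption-laden illustration: the queue names, threshold values, and the `max_spread` disagreement test are hypothetical, and `member_scores` is assumed to hold several ensemble members' scores for the same label.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueuePolicy:
    act_at: float     # mean score at or above this triggers enforcement
    review_at: float  # mean score at or above this goes to human review


# Hypothetical thresholds: the emergency queue accepts more false positives.
POLICIES = {
    "ncii_emergency": QueuePolicy(act_at=0.80, review_at=0.30),
    "general_feed": QueuePolicy(act_at=0.95, review_at=0.60),
}


def route(queue: str, member_scores: dict, public_figure: bool = False,
          max_spread: float = 0.5) -> str:
    policy = POLICIES[queue]
    scores = list(member_scores.values())
    mean = sum(scores) / len(scores)
    # Large disagreement between ensemble members counts as a conflict.
    conflicting = max(scores) - min(scores) > max_spread
    if public_figure or conflicting:
        return "human_review"
    if mean >= policy.act_at:
        return "action"
    if mean >= policy.review_at:
        return "human_review"
    return "allow"
```

For example, `route("ncii_emergency", {"m1": 0.85, "m2": 0.90})` returns `"action"`, while the same scores in `"general_feed"` return `"human_review"` because that queue demands higher precision before acting automatically.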

Perceptual hashing for targeted staydown

Targeted staydown—blocking re‑uploads of the same adjudicated illegal image/video—is foundational for NCII response.

- Hash families: Generate multiple perceptual hashes (e.g., robust image/video fingerprints) per asset to balance collision and evasion risks; this blueprint does not prescribe specific algorithms or match thresholds.

- Adjudication gates: Only material removed as illegal or policy‑violating after due process enters the staydown index.

- Jurisdiction and geofencing: Apply staydown regionally when legality differs by country or state and when disclosures or takedown windows are jurisdiction‑specific.

- Privacy and minimization: Store hashes and minimal metadata, not the underlying biometric vectors unless strictly necessary and consented. Enforce deletion schedules aligned to retention policies.
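To make the staydown mechanics concrete, the sketch below uses a difference hash (dHash) as a stand-in. The source does not name an algorithm, so this choice is purely illustrative, as are the `StaydownIndex` class and the `max_distance` of 6 bits. The input is assumed to be a grayscale image already downscaled to 8 rows by 9 columns. The index stores only the hash and region tags, and refuses entries that have not been adjudicated.

```python
from typing import Iterable, Sequence


def dhash(gray: Sequence[Sequence[int]]) -> int:
    """Difference hash: one bit per adjacent-pixel comparison in each row.

    An 8x9 grid (8 rows, 9 columns) yields a 64-bit hash.
    """
    bits = 0
    for row in gray:
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | (1 if left > right else 0)
    return bits


def hamming(a: int, b: int) -> int:
    return bin(a ^ b).count("1")


class StaydownIndex:
    def __init__(self, max_distance: int = 6):  # hypothetical threshold
        self.max_distance = max_distance
        self._entries = []  # (hash, frozenset of region tags); no raw media

    def register(self, h: int, regions: Iterable[str], adjudicated: bool) -> None:
        # Adjudication gate: only reviewed removals may enter the index.
        if not adjudicated:
            raise ValueError("only adjudicated content may enter the index")
        self._entries.append((h, frozenset(regions)))

    def is_blocked(self, h: int, region: str) -> bool:
        return any(
            region in regions and hamming(h, entry) <= self.max_distance
            for entry, regions in self._entries
        )
```

A uniformly brightened copy of an image keeps the same left-versus-right ordering and therefore the same dHash, which is why near-duplicate re-uploads still match. Heavier edits are what the multi-hash "family" approach is meant to cover.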

Comparison Tables

Detection and authenticity tools at a glance

| Technique | Primary purpose | Strengths | Limitations | Best use in pipeline |
|---|---|---|---|---|
| C2PA content credentials | Verify provenance; disclose AI generation/manipulation | Cryptographically verifiable; human‑readable disclosures; survives many transforms when propagated | Not universally present; relies on ecosystem support | Ingest verification; user‑facing labels; audit trail |
| Robust watermarks / GPAI detectability | Signal AI‑generated origin | Low overhead at inference; complements provenance | Vulnerable to stripping or heavy edits; probabilistic detection | Parallel detector stage; fused into confidence scores |
| Classifier ensembles (NCII/deepfake) | Identify manipulated/explicit content and NCII risk | Proactive screening; tunable thresholds; human‑in‑the‑loop | Drift over time; adversarial inputs; requires governance | Core processing graph; risk‑tiered queues and appeals |
| Perceptual hashing (staydown) | Prevent re‑uploads of adjudicated content | Efficient at scale; avoids re‑review; geofenceable | Collisions and evasion possible; careful adjudication required | Post‑decision enforcement; cross‑service coordination |

Best Practices

Age, identity, and consent verification without over‑collection

Sexual content platforms must combine age gating and performer/uploader checks while minimizing sensitive data processing.

- Age‑assurance: Gate access to pornography with robust age‑assurance. Build a vendor‑agnostic interface so methods can evolve without re‑architecting.

- Performer identity and age: For actual sexually explicit content, verify performers’ identities and ages and maintain compliant records and custodian‑of‑records notices. Design upload flows that clearly separate purely synthetic content from actual content to avoid mislabeling.

- Consent capture and revocation: Collect explicit consent from performers; provide revocation pathways and link them to moderation queues for rapid enforcement.

- KYU/KYV proportionality: Apply verification based on risk and role (e.g., high‑risk uploaders). Minimize data fields and implement strict access control, encryption at rest, and short retention for sensitive artifacts.

Privacy‑by‑design in moderation

Across regions, processing sexual and biometric data requires a lawful basis, transparency, minimization, and security:

- Lawful basis and DPIAs: Document legal bases and conduct data protection impact assessments for high‑risk processing (e.g., face matching, sensitive content classification).

- Minimization and deletion: Prefer hashes and low‑resolution transforms over raw faces. Establish deletion schedules for all artifacts, including hashes and telemetry, aligned to purpose limitation.

- Access controls and audit trails: Enforce role‑based access, need‑to‑know policies, immutable decision logs, and statement‑of‑reasons records.

- International transfers: Use approved transfer mechanisms for cross‑border signal sharing and ensure regional data residency when required.
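Deletion schedules are easier to audit when they live in one table that maps every artifact type to a retention period. The sketch below is a minimal illustration: the artifact kinds and the retention periods are hypothetical and carry no legal weight. Artifacts of an unknown kind are flagged for deletion on the assumption that anything without a documented purpose should not be kept.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods; real values come from the DPIA and policy.
RETENTION = {
    "perceptual_hash": timedelta(days=365),
    "evidence_snapshot": timedelta(days=90),
    "face_match_vector": timedelta(days=7),
}


@dataclass(frozen=True)
class Artifact:
    artifact_id: str
    kind: str
    created_at: datetime


def due_for_deletion(artifacts, now: datetime) -> list:
    due = []
    for artifact in artifacts:
        limit = RETENTION.get(artifact.kind)
        # Unknown kinds have no documented purpose, so flag them as well.
        if limit is None or now - artifact.created_at >= limit:
            due.append(artifact.artifact_id)
    return due


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
items = [
    Artifact("h1", "perceptual_hash", now - timedelta(days=30)),
    Artifact("f1", "face_match_vector", now - timedelta(days=8)),
    Artifact("x1", "debug_dump", now - timedelta(days=1)),
]
expired = due_for_deletion(items, now)
```

Here `expired` contains `f1` (past its seven-day limit) and `x1` (no retention entry), while the 30-day-old hash is retained.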

Labeling and disclosure services

User‑facing transparency is no longer optional for deepfakes and AI‑manipulated sexual content.

- Persistent labels: Display conspicuous, contextual labels when content is AI‑generated or manipulated. Bind labels to the asset and propagate through share, embed, and derivative workflows.

- Context and harm reduction: Combine labels with reduced reach to minors and safer recommendation defaults in high‑risk contexts.

- Geofenced compliance: Trigger labels or disclosures where election‑period rules or jurisdiction‑specific mandates apply.
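Label persistence across derivatives can be modeled as inheritance along the parent chain. The sketch below assumes a hypothetical in-memory `store` keyed by asset ID, where each derivative records its `parent_id`. The label strings are illustrative.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Asset:
    asset_id: str
    labels: set = field(default_factory=set)
    parent_id: Optional[str] = None  # set on transcodes, crops, re-shares


def effective_labels(asset_id: str, store: dict) -> set:
    """Union of the asset's own labels and those of all its ancestors."""
    labels = set()
    seen = set()
    current = asset_id
    # The `seen` set guards against accidental cycles in parent links.
    while current is not None and current not in seen:
        seen.add(current)
        asset = store[current]
        labels |= asset.labels
        current = asset.parent_id
    return labels


store = {
    "orig": Asset("orig", {"ai_generated"}),
    "thumb": Asset("thumb", set(), parent_id="orig"),
    "clip": Asset("clip", {"edited"}, parent_id="thumb"),
}
```

With this store, `effective_labels("clip", store)` yields both `ai_generated` and `edited`, so a derivative cannot shed a disclosure simply by being re-encoded.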

Cross‑region architecture and feature flags

One global codebase must behave differently by region.

- Data residency: Partition indices (e.g., perceptual hashes, audit logs) when regional residency is required; design minimal cross‑region replication.

- Jurisdiction‑aware feature flags: Toggle proactive screening levels, label text, and takedown windows by locale.

- Representation and access: Support regulators’ data access requests while protecting user privacy via strict audit logging and scoped data exports.
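Jurisdiction-aware flags can be as simple as global defaults plus per-region overrides. In the sketch below the region keys, flag names, and values are all placeholders. They do not reflect any real legal requirement and would be supplied by legal and policy teams.

```python
# Hypothetical defaults and overrides; not statements of any actual law.
DEFAULTS = {
    "proactive_screening": "standard",
    "label_text": "AI-generated",
    "takedown_window_hours": 48,
}

OVERRIDES = {
    "region-a": {"proactive_screening": "enhanced", "takedown_window_hours": 24},
    "region-b": {"label_text": "Synthetic media"},
}


def flags_for(region: str) -> dict:
    """Merge region overrides onto the defaults; unknown regions get defaults."""
    return {**DEFAULTS, **OVERRIDES.get(region, {})}
```

Because `flags_for` returns a fresh dictionary on each call, callers can log the exact flag set used for a decision alongside the statement of reasons without risking mutation of the shared defaults.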

Observability, performance, and audit integrity

- Latency budgets: Set per‑stage budgets so end‑to‑end detection and decisioning can support rapid takedown and removal‑notice compliance; concrete latency targets are deployment‑specific and not given here.

- Throughput scaling: Auto‑scale the processing DAG; use backpressure and prioritized queues for emergency NCII cases.

- Audit log integrity: Cryptographically chain decision logs where feasible; retain structured statements of reasons and evidence snapshots within retention limits.

- SLOs: Define SLOs for time‑to‑takedown, appeal resolution, and staydown effectiveness; continuously monitor drift in classifiers and watermark detectors.
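Cryptographic chaining of decision logs can be sketched with SHA-256 from the standard library: each record's hash covers the previous record's hash plus a canonical serialization of the entry, so any later edit breaks verification. The record layout and field names below are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(prev_hash: str, entry: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) keeps hashes reproducible.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append(log: list, entry: dict) -> dict:
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"prev": prev_hash, "entry": entry, "hash": _digest(prev_hash, entry)}
    log.append(record)
    return record


def verify(log: list) -> bool:
    prev_hash = GENESIS
    for record in log:
        if record["prev"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["entry"]):
            return False
        prev_hash = record["hash"]
    return True


log = []
append(log, {"asset": "a1", "decision": "remove", "reason": "adjudicated NCII"})
append(log, {"asset": "a2", "decision": "label", "reason": "declared AI-generated"})
```

A chain like this only detects tampering; it does not prevent it. Periodically anchoring the latest hash in a separate system is one common way to make wholesale rewrites detectable too.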

Security and Abuse Hardening 🛡️

Adversaries will strip watermarks, perturb classifiers, and attempt to poison staydown indices. The engineering response blends prevention, detection, and response.

- Watermark‑stripping countermeasures: Detect distribution shifts consistent with aggressive recompression/cropping; fuse with other signals rather than relying on watermarks alone.

- Adversarial testing: Red‑team pipelines with common attacks (JPEG abuse, resampling, blur/noise, GAN‑based remastering). Maintain a risk register and model‑specific playbooks.

- Model and label drift monitoring: Track precision/recall on curated validation sets; trigger retraining and recalibration workflows. No reference benchmarks are prescribed here.

- Targeted staydown hygiene: Require dual‑review before adding to the staydown index; support reversible unblocking on successful appeals; maintain region tags to honor local legality.

- Vendor governance: Contract for model documentation, watermarking support, and content‑credential APIs. Require attestations for detectability features and update cadences.

- Crisis response: Pre‑define incident playbooks for viral NCII or sexual deepfake campaigns, including containment measures, escalation paths, and regulator communication protocols.
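Red-team results are most useful when reported per attack. The harness below is a toy sketch of that idea: `detector` and the transforms are hypothetical callables, and the samples are plain lists of numbers standing in for media. It computes the detection rate on positive samples after each transform and lists the transforms that fall below a chosen floor.

```python
def robustness_report(detector, positives, transforms) -> dict:
    """Detection rate on known-positive samples after each transform."""
    report = {}
    for name, transform in transforms.items():
        hits = sum(1 for sample in positives if detector(transform(sample)))
        report[name] = hits / len(positives)
    return report


def below_floor(report: dict, floor: float) -> list:
    """Names of transforms whose detection rate is under the floor."""
    return sorted(name for name, rate in report.items() if rate < floor)


# Toy stand-ins: a "detector" that fires when the mean signal exceeds 0.5,
# and an "attack" that halves the signal.
def toy_detector(sample):
    return sum(sample) / len(sample) > 0.5


toy_transforms = {
    "identity": lambda s: list(s),
    "attenuate": lambda s: [v * 0.5 for v in s],
}

report = robustness_report(toy_detector, [[0.9, 0.8], [0.7, 0.9]], toy_transforms)
weak = below_floor(report, floor=0.9)
```

In this toy run the detector catches every untouched sample but none of the attenuated ones, so `weak` names the `attenuate` transform as the entry to add to the risk register.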

Conclusion

Engineering moderation for AI‑generated sexual images at scale is now a compliance‑critical discipline. A defensible system fuses provenance (C2PA), robust watermark detection, classifier ensembles, and perceptual hashing into a multi‑signal pipeline with jurisdiction‑aware policies, auditable decisions, and privacy‑by‑design controls. It also mandates rigorous age/identity/consent verification for adult uploads, conspicuous labeling for AI‑generated or manipulated content, and targeted staydown that prevents re‑uploads once content is adjudicated. The teams that succeed will treat this as a living program: continuously tested, measured, and adapted to evolving guidance and abuse tactics.

Key takeaways:

- Build a parallel processing graph that ingests provenance, detects robust watermarks, and runs classifier ensembles, feeding an explainable decisioning layer.

- Use perceptual hashing for targeted staydown with strict adjudication gates, regional tagging, and minimal data retention.

- Implement age/identity/consent verification workflows that meet legal duties without over‑collecting sensitive data.

- Enforce privacy‑by‑design: lawful bases, DPIAs, minimization, access controls, and deletion schedules across all artifacts.

- Harden against adversaries via red‑team tests, drift monitoring, and vendor governance for watermarking and detectability.

Next steps: inventory your current signals and gaps; stand up C2PA verification and label propagation; calibrate a minimal yet effective ensemble and watermark‑fusion stage; operationalize a targeted staydown index; and run a DPIA that maps every artifact to a lawful basis, retention, and access policy. From there, iterate on metrics, red‑team results, and jurisdiction‑specific flags—because by 2026, “good enough” detection won’t be enough.