Ambient Agents, Attested Edge Clouds, and On‑Device Multimodality Set the Next Two Years of Mobile AI

An innovation roadmap for privacy‑preserving assistants, energy‑aware models, and regionally adapted stacks

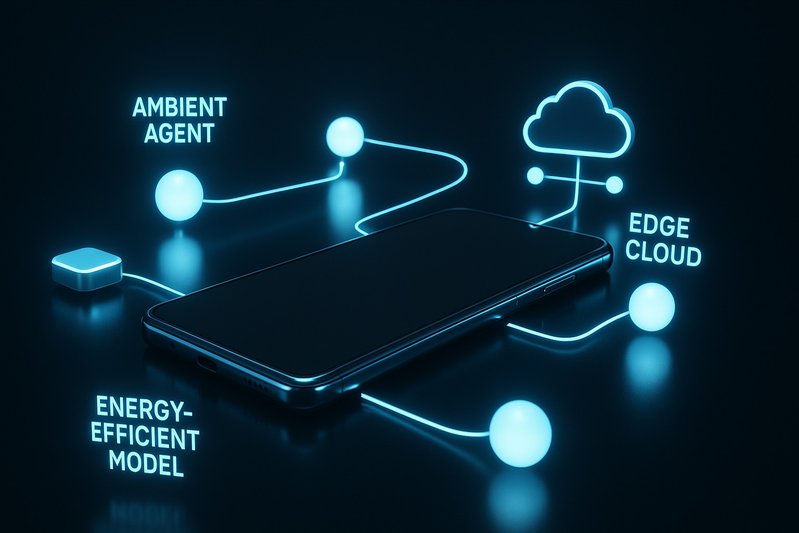

By early 2026, smartphone AI has shifted from feature add‑on to foundational fabric—especially at the premium tier—reshaping how users write, translate, capture, and edit across the system. The most visible gains are material: assistants that work across apps, multimodal camera and video pipelines, and real‑time communication that holds up even when networks don’t. What now separates leaders is less “who has AI” and more “who ships trustworthy, on‑device‑first experiences with consistent default‑app coverage, clear privacy posture, and predictable energy behavior.” This piece lays out the innovation roadmap that will define the next two years: ambient agents with persistent context and privacy guarantees, attested edge clouds for verifiable offload beyond a single platform, on‑device multimodality aimed at real‑time video understanding, and energy‑first design anchored by modern NPUs. Readers will learn what to watch in attestation APIs, MLPerf Mobile trajectories, and NPU roadmaps; which UI patterns earn trust; how regional stacks diverge and converge; and what OEMs must do now to broaden assistant coverage without burning battery or user goodwill.

Research Breakthroughs

From feature to fabric: assistants that act across apps with persistent context and privacy guarantees

System‑level assistants are escaping siloed apps and acting where users already work. Apple Intelligence brings writing tools—summarize, rewrite, proofread—inline across iOS, with a more contextually capable Siri. On Android, Gemini is embedded at multiple layers, with Gemini Nano powering on‑device workflows like Recorder summaries and smart replies on Pixel 8 Pro. Samsung’s Galaxy AI turns cross‑app actions into muscle memory: Circle to Search works from any screen, Live Translate spans calls and in‑person conversations, and Note/Transcript Assist meet users in default apps instead of asking them to copy/paste or app‑hop.

flowchart LR;

A[User] -->|uses| B[Apple Intelligence]

A -->|uses| C[Android Gemini]

B -->|provides| D[Writing Tools]

C -->|powers| E[On-device Workflows]

B -->|interacts with| F[Siri]

C -->|supports| G[Smart Replies]

H[Galaxy AI] -->|enables| I[Circle to Search]

H -->|integrates| J[Live Translate]

H -->|supports| K[Note/Transcript Assist]

This flowchart illustrates the interconnected functionalities of various AI systems and assistants across different platforms, showcasing how users engage with these technologies for enhanced productivity and seamless interactions.

Privacy signaling now travels with capability. Apple’s model leads: when tasks exceed on‑device capacity, Private Cloud Compute offloads to verifiably hardened servers built on Apple silicon, with cryptographic attestation and transparency commitments. Samsung anchors Galaxy AI within its Knox platform, pairing on‑device modes for sensitive workflows with enterprise‑grade controls and hardware‑backed key storage. Google exposes clear prompts and settings that distinguish on‑device handling from cloud features, and relies on Android sandboxing as a further line of defense. The pattern is clear: assistants must be systemwide and contextual, but their success will be judged by how predictably they stay local, and by how credibly they prove it when the cloud must help.

Asus underscores an adjacent thread: the value of robust offline capability. Zenfone 12 Ultra integrates an on‑device Meta Llama 3‑8B for summarization and positions all Asus‑developed tools—document scan, transcript, article summary—to run locally, with optional cloud for heavy lifts. ROG Phone’s gaming‑centric AI (X Sense, X Capture, AI Grabber) favors on‑device recognition for low latency. This local‑first stance mitigates the day‑to‑day risk of “feature failed” errors in weak networks while strengthening privacy by default.

Standardizing trust: attested offload and verifiable compute moving beyond a single platform

Verifiable, privacy‑preserving offload is now a competitive differentiator, not a checkbox. Apple’s Private Cloud Compute established a high‑assurance blueprint: off‑device execution in sealed, attestable environments on vendor‑controlled hardware. Samsung and Google outline robust on‑device defaults and clear UX controls that govern when network or account data flows off the device. The next two years should push attested compute beyond a single platform, with API‑level signals that any app or assistant can query: which model ran, where it ran, and under what attestation. Expect enterprise and regulated sectors to demand portable attestations and policy hooks that span OSes. The test won’t be the marketing label; it will be whether users and admins can verify, predict, and audit how data leaves the device, if it ever does.
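To make the idea of a queryable signal concrete, here is a minimal sketch of what such a record and an admin‑side policy check could look like. Nothing here corresponds to a shipping OS API: `ExecutionRecord`, `Location`, `allowed_by_policy`, and the digest strings are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Set


class Location(Enum):
    """Where a task ran (hypothetical categories)."""
    ON_DEVICE = "on_device"
    ATTESTED_CLOUD = "attested_cloud"
    UNATTESTED_CLOUD = "unattested_cloud"


@dataclass(frozen=True)
class ExecutionRecord:
    """Hypothetical per-task signal: which model ran, where, under what attestation."""
    model_id: str
    location: Location
    # Assumed to be a digest of the verified server image; None if nothing was attested.
    attestation_digest: Optional[str] = None


def allowed_by_policy(record: ExecutionRecord, trusted_digests: Set[str]) -> bool:
    """Policy sketch: local runs pass; cloud runs pass only with a known attestation."""
    if record.location is Location.ON_DEVICE:
        return True
    if record.location is Location.ATTESTED_CLOUD:
        return record.attestation_digest in trusted_digests
    return False
```

An enterprise admin could, under these assumptions, audit a log of such records against an allowlist of digests, which is the "verify, predict, and audit" loop in miniature.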

On‑device multimodality: toward real‑time video understanding and generative refinement on handset silicon

Cameras and video pipelines are turning into real‑time semantic engines. Pixel keeps blending device‑side semantics with cloud‑accelerated edits like Magic Editor and Video Boost, depending on feature constraints. Samsung’s Generative Edit and cross‑app assistive features compress steps from capture to polish. Asus leans into creator workflows on‑device with AI Magic Fill, Unblur, and Portrait Video 2.0, plus AI Tracking to stabilize and focus the action.

The trajectory is toward more of this happening live on handset silicon. Modern NPUs/APUs and schedulers, combined with energy‑efficient operator libraries, move still‑image edits, summarization, and translation comfortably on‑device. Heavy generative video remains challenging: long edits can be energy‑intensive and are often deferred to cloud pipelines. But the boundary is shifting as device‑side inference throughput improves and software stacks co‑schedule ISP/NPU/GPU to avoid stalls. The pragmatic goal over the next cycles: real‑time recognition and light‑touch generative refinement locally, with transparent, attested offload for the few tasks that still exceed thermal and energy budgets.

Roadmap & Future Directions

Energy as a first‑class constraint: what scales, what waits

Battery and thermals define the ceiling for mobile AI. Benchmarks such as MLPerf Mobile show generation‑over‑generation gains in inference throughput and latency, enabling interactive on‑device workloads that previously needed the cloud. Vendor disclosures on NPU capability and efficiency, alongside optimized operator libraries, reduce power per task. The lived outcome: still‑image edits, text summarization, and translation carry modest battery impact on recent flagship silicon, while long generative video edits remain expensive.

What’s next:

- On‑device first, with smart deferral: Keep short, interactive tasks local; queue heavy, non‑urgent jobs for attested offload or idle, plugged‑in windows.

- System schedulers that treat energy as a product requirement: Coordinate ISP/NPU/GPU bursts to avoid thermal cliffs that degrade AI mid‑task.

- Model/tooling improvements focused on power efficiency: Continue compressing power per token, frame, and edit through software and scheduling advances, even when full workloads cannot yet be local.
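The "on‑device first, with smart deferral" rule above can be sketched as a small routing function. This is an illustrative policy under stated assumptions, not any vendor's scheduler: the `Task` and `DeviceState` fields, the 50 mWh energy budget, and the 0.2 thermal‑headroom floor are all made‑up values for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    RUN_LOCAL_NOW = "run_local_now"
    ATTESTED_OFFLOAD = "attested_offload"
    DEFER_TO_IDLE_CHARGING = "defer_to_idle_charging"


@dataclass(frozen=True)
class Task:
    name: str
    est_energy_mwh: float  # assumed estimate of the energy cost if run locally


@dataclass(frozen=True)
class DeviceState:
    charging: bool
    idle: bool
    thermal_headroom: float  # 0.0 = already throttling, 1.0 = cool


def choose_route(task: Task, device: DeviceState, offload_approved: bool,
                 energy_budget_mwh: float = 50.0, min_headroom: float = 0.2) -> Route:
    """Route a task: local when cheap, local when plugged in and idle, else offload or wait."""
    cool_enough = device.thermal_headroom >= min_headroom
    # Short tasks stay local as long as the device is not near a thermal cliff.
    if cool_enough and task.est_energy_mwh <= energy_budget_mwh:
        return Route.RUN_LOCAL_NOW
    # Heavy tasks may still run locally inside an idle, plugged-in window.
    if cool_enough and device.charging and device.idle:
        return Route.RUN_LOCAL_NOW
    # Otherwise offload only with explicit user approval; if not approved, wait.
    if offload_approved:
        return Route.ATTESTED_OFFLOAD
    return Route.DEFER_TO_IDLE_CHARGING
```

The ordering encodes the priority in the list: local first, then an idle charging window, then user‑approved attested offload, and deferral as the fallback that never moves data off the device.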

The north star is consistency. Users perceive trust as much through stable latency and battery steadiness as through raw speed—and they judge reliability by whether AI features complete predictably in poor signal conditions.

Milestones to track: MLPerf Mobile trajectories, NPU roadmaps, attestation APIs

A credible forward watchlist includes:

- MLPerf Mobile progress: Track how device‑class inference evolves across text and vision tasks to infer what can shift on‑device next.

- NPU roadmaps: New waves from Snapdragon 8‑class and Dimensity 9300‑class platforms anchor the cadence for local‑first features; capability improvements often arrive in tandem with more efficient schedulers.

- Attestation and privacy APIs: Watch for OS‑level signals that tell users and apps where a task ran and under what guarantees, building on the bar set by Private Cloud Compute.

Regional divergence and convergence: local LLM partnerships, compliance, cross‑border portability

Feature breadth increasingly lands everywhere, but the back‑ends differ. Xiaomi’s HyperOS embeds assistant functions across camera, gallery, and system utilities with system scheduling tuned for efficiency, while Xiaomi 14 Ultra’s camera stack pushes computational photography with pro‑grade options. Oppo’s ColorOS integrates AI eraser/editing, transcription and summarization aids, and resource orchestration engines, and regional assistants adapt to local regulations and expectations. China‑market builds often integrate domestic services to comply with local requirements, aiming for parity in user‑visible capabilities.

In North America, Europe, and Japan, expectations tilt toward Apple/Google/Samsung’s privacy stories, default‑app polish, and enterprise‑friendly signals such as Knox. Cross‑border portability will matter more as travelers and multinational users expect consistent privacy and performance guarantees when devices roam—an argument for standardized attestation and predictable on‑device modes regardless of market. The convergence is functional parity on common tasks like rewrite/summarize, translation, and photo edits; the divergence is in privacy guarantees, service integrations, and how visibly those guarantees are communicated.

Impact & Applications

Safety, reliability, and explainability: UI patterns that earn confidence

Trust is won through design choices users can feel:

- Clear boundaries: Inline writing tools on iOS and Android make it obvious when processing is on‑device versus invoking cloud resources, with prompts and settings that explain trade‑offs.

- On‑device default modes: Live Translate on Samsung offers local processing paths; Pixel’s Recorder summaries complete locally in most cases, avoiding “feature failed” moments in poor connectivity.

- Attested offload when necessary: Apple’s Private Cloud Compute explicitly states when a task leaves the device and under what protections, meeting the bar for privacy‑sensitive and enterprise users.

Explainability in mobile AI doesn’t require dense citations; it calls for predictable behavior, visible status, and recoverable outcomes. If a feature can’t complete locally, it should tell you, ask permission to offload, and return a result with the same predictable latency profile. Confidence grows when users see consistent completion times, understand what data moves where, and can opt out without losing core functionality.
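The "tell you, ask permission, return a result" sequence can be written down as a tiny flow. The sketch below is an assumption‑laden illustration: `run_with_visible_status`, the status strings, and the `ask_permission` callback are hypothetical, standing in for whatever prompt UI a platform provides.

```python
from typing import Callable, List, Tuple


def run_with_visible_status(
    can_run_locally: bool,
    ask_permission: Callable[[str], bool],
) -> Tuple[str, List[str]]:
    """Return an outcome plus the status messages the user would have seen."""
    status: List[str] = []
    if can_run_locally:
        status.append("Running on device")
        return "completed_local", status
    # Visible status: say why the local path is not available.
    status.append("Cannot complete on device")
    # Ask before any data leaves the device.
    if ask_permission("Send this request to an attested server?"):
        status.append("Running on attested server")
        return "completed_offload", status
    # Recoverable outcome: declining leaves data on the device.
    status.append("Kept on device; task not run")
    return "declined", status
```

For example, `run_with_visible_status(False, lambda prompt: False)` returns the `"declined"` outcome together with both status messages, so the user can see what was skipped and why.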

Measuring what matters next: user‑perceived trust, latency stability, failure predictability

Speed and accuracy alone no longer capture UX quality. A practical lens is the “5‑S” framework:

- Speed: Fewer steps and less app‑hopping via cross‑app assist.

- Success rate: Offline availability and graceful degradation reduce failures in bad networks.

- Satisfaction: Coverage in default apps drives discovery and habit.

- Security trust: Architectural assurances—on‑device first, attested offload—anchor confidence.

- Energy cost: Tasks must land within everyday battery budgets.

To refine this lens, two complementary measures matter:

- Latency stability: Not just median speed, but variance under load, weak signal, and thermal stress.

- Failure predictability: Clear states when a task will succeed offline versus defer, and intelligible fallbacks.
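Both measures can be computed from ordinary test logs. The following is one possible formulation, not a standard metric definition: `latency_stability` reports the median, a nearest‑rank 95th percentile, and their ratio as a simple tail indicator, while `failure_predictability` is the share of runs whose outcome matched what the UI predicted. The function names and the choice of p95 are assumptions.

```python
import statistics
from typing import Dict, Sequence


def latency_stability(samples_ms: Sequence[float]) -> Dict[str, float]:
    """Summarize latency spread: median, nearest-rank p95, and p95/median ratio."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    median = statistics.median(ordered)
    # Nearest-rank percentile using integer math: rank = ceil(0.95 * n).
    rank = (95 * len(ordered) + 99) // 100
    p95 = ordered[rank - 1]
    return {"median_ms": median, "p95_ms": p95, "tail_ratio": p95 / median}


def failure_predictability(predicted_ok: Sequence[bool], actual_ok: Sequence[bool]) -> float:
    """Fraction of runs where the outcome matched the state shown to the user."""
    if not predicted_ok or len(predicted_ok) != len(actual_ok):
        raise ValueError("inputs must be non-empty and the same length")
    matches = sum(p == a for p, a in zip(predicted_ok, actual_ok))
    return matches / len(predicted_ok)
```

Comparing `tail_ratio` across conditions (cool versus thermally stressed, strong versus weak signal) shows whether a feature's slow cases stay close to its typical case; a ratio near 1.0 indicates stable latency.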

External battery testing across realistic mixes provides useful context for endurance under AI use, though specific figures vary by device and workload. The goal for the next two years: make AI outcomes as predictable as tapping the camera shutter—fast, consistent, and with known trade‑offs.

Roadmaps for OEMs: deeper default‑app coverage, lifecycle commitments, assistant breadth

The next two years will reward execution detail:

- Broaden default‑app coverage: Put explain/translate/summarize where users already live—notes, keyboard, camera, phone, recorder—mirroring how Galaxy AI and Apple’s systemwide writing tools drive daily use.

- Commit to local‑first: Prioritize on‑device for privacy‑sensitive voice and personal text; reserve attested offload for heavy, user‑approved tasks.

- Ship transparent controls: Make on‑device vs cloud choices explicit and reversible, with enterprise policy hooks where relevant.

- Tune for personas: Asus demonstrates the power of focused value—creator‑leaning on‑device summarization on Zenfone and latency‑critical recognition for gaming on ROG—while leaders like Apple, Google, and Samsung showcase breadth. Choose a center of gravity and execute deeply.

- Lifecycle and stability: Sustained updates that improve on‑device models and schedulers post‑launch can meaningfully lift speed, reliability, and energy without new hardware.

Risks and breakpoints: model errors, privacy missteps, and battery drain

Three fault lines will test mobile AI maturity:

- Incorrect or overconfident outputs: Generative systems can produce wrong or misleading results; specific rates are unavailable, so design for verification and easy rollback.

- Privacy gaps in offload: Without attested, vendor‑controlled environments and clear disclosures, off‑device processing erodes trust—especially in regulated contexts.

- Battery and thermals: Long generative video edits and other heavy jobs can tip devices into thermal throttling or visible drain; practical UX must route them to cloud or idle windows, with explicit user choice.

The resilient path is to keep interactive tasks on‑device, communicate when and why offload occurs, and give users control over battery‑vs‑quality trade‑offs.

Conclusion

Mobile AI’s next phase isn’t about invention in isolation; it’s about integration with guarantees. Assistants are turning from features into fabric—present in any app, carrying context, and staying local by default. When devices must reach for extra horsepower, attested edge clouds show how to preserve privacy without pausing progress. Cameras and video pipelines continue their drift toward real‑time semantic understanding on‑device, bounded by energy realities that smart schedulers and ever‑improving NPUs steadily relax. The winners will measure what users actually feel—trust, stability, and endurance—and design their roadmaps around those outcomes.

Key takeaways:

- Ambient agents need persistent context and explicit privacy guarantees to earn daily trust.

- Attested offload is moving from differentiator to expectation; standardized APIs should follow.

- On‑device multimodality will expand in text, image, and short‑form video; heavy generative edits remain candidates for cloud.

- Energy is the limiting reagent; design for consistent latency and battery steadiness, not just peak speed.

- Regional stacks will converge on features but diverge in back‑ends; portability of trust signals matters.

Next steps for builders and buyers:

- Builders: Prioritize default‑app coverage, on‑device defaults, and transparent controls; invest in attestation pathways and scheduler tuning; track MLPerf Mobile and NPU roadmaps to plan capability shifts.

- Buyers: Look for clear privacy architecture, reliable offline modes, and consistent latency under real conditions; assess assistant breadth where you actually work—notes, camera, calls, and recorder.

Looking ahead, watch three dials: MLPerf Mobile trajectories for what stays on‑device, NPU generations for how fast and efficient it gets, and attestation APIs for how safely the rest flows. If those dials move in concert, ambient, privacy‑preserving assistants will feel less like novelty and more like the default way phones think and act.